You’re running an application on-premises due to its dependency on non-x86 hardware and want to use AWS for data backup. Your backup application is only able to write to POSIX-compatible block-based storage. You have 140TB of data and would like to mount it as a single folder on your file server. Users must be able to access portions of this data while the backups are taking place.

What backup solution would be most appropriate for this use case?

A. Use Storage Gateway and configure it to use Gateway Cached volumes.

B. Configure your backup software to use S3 as the target for your data backups.

C. Configure your backup software to use Glacier as the target for your data backups.

D. Use Storage Gateway and configure it to use Gateway Stored volumes.

Explanation:

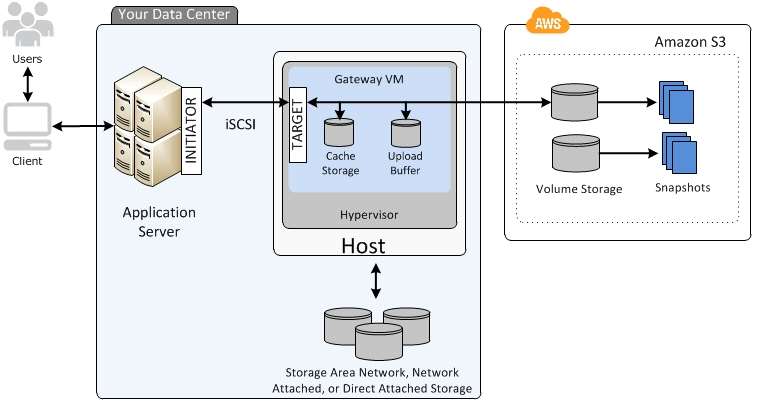

Gateway-Cached Volume Architecture

Gateway-cached volumes let you use Amazon Simple Storage Service (Amazon S3) as your primary data storage while retaining frequently accessed data locally in your storage gateway. Gateway-cached volumes minimize the need to scale your on-premises storage infrastructure, while still providing your applications with low-latency access to their frequently accessed data. You can create storage volumes up to 32 TiB in size and attach to them as iSCSI devices from your on-premises application servers. Your gateway stores data that you write to these volumes in Amazon S3 and retains recently read data in your on-premises storage gateway’s cache and upload buffer storage.

Gateway-cached volumes can range from 1 GiB to 32 TiB in size and must be rounded to the nearest GiB. Each gateway configured for gateway-cached volumes can support up to 32 volumes, for a total maximum storage capacity of 1,024 TiB (1 PiB).
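As a rough sizing sketch, assuming the question's 140TB means decimal terabytes (10^12 bytes) and rounding up to whole GiB so no data is cut off, the limits above show how many cached volumes the dataset would occupy. The helper `plan_cached_volumes` is hypothetical.

```python
# Limits for gateway-cached volumes, taken from the text above.
MIN_VOLUME_GIB = 1
MAX_VOLUME_GIB = 32 * 1024        # 32 TiB expressed in GiB
MAX_VOLUMES_PER_GATEWAY = 32      # 32 x 32 TiB = 1,024 TiB per gateway
GIB = 2**30


def plan_cached_volumes(data_bytes: int) -> list[int]:
    """Hypothetical helper: split a dataset into cached-volume sizes in whole GiB."""
    total_gib = max(MIN_VOLUME_GIB, -(-data_bytes // GIB))   # ceiling to whole GiB
    count = -(-total_gib // MAX_VOLUME_GIB)                  # volumes needed
    if count > MAX_VOLUMES_PER_GATEWAY:
        raise ValueError("dataset exceeds one gateway's 1,024 TiB limit")
    # Fill every volume but the last to the 32 TiB maximum.
    sizes = [MAX_VOLUME_GIB] * (count - 1)
    sizes.append(total_gib - MAX_VOLUME_GIB * (count - 1))
    return sizes


print(plan_cached_volumes(140 * 10**12))  # [32768, 32768, 32768, 32082]
```

So 140TB would span four separate iSCSI volumes on one gateway (five if the figure means 140 TiB), since no single cached volume can exceed 32 TiB.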

In the gateway-cached volume solution, AWS Storage Gateway stores all your on-premises application data in a storage volume in Amazon S3.

The diagram above provides an overview of the AWS Storage Gateway-cached volume deployment. After you’ve installed the AWS Storage Gateway software appliance (the virtual machine, or VM) on a host in your data center and activated it, you can use the AWS Management Console to provision storage volumes backed by Amazon S3. You can also provision storage volumes programmatically using the AWS Storage Gateway API or the AWS SDK libraries. You then mount these storage volumes to your on-premises application servers as iSCSI devices.
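Provisioning through the SDK might look like the sketch below. It only builds the keyword arguments for boto3's `create_cached_iscsi_volume` call (the `CreateCachediSCSIVolume` API), so it runs without AWS credentials. The helper name, gateway ARN, target name, and IP address are placeholders chosen for illustration.

```python
import uuid

MAX_CACHED_VOLUME_GIB = 32 * 1024   # 32 TiB, per the limits quoted above


def cached_volume_request(gateway_arn: str, size_gib: int, target_name: str,
                          network_interface_id: str) -> dict:
    """Hypothetical helper: keyword arguments for boto3's StorageGateway
    create_cached_iscsi_volume call."""
    if not 1 <= size_gib <= MAX_CACHED_VOLUME_GIB:
        raise ValueError("gateway-cached volumes must be 1 GiB to 32 TiB")
    return {
        "GatewayARN": gateway_arn,
        "VolumeSizeInBytes": size_gib * 2**30,       # the API expects bytes
        "TargetName": target_name,                   # suffix of the iSCSI target name
        "NetworkInterfaceId": network_interface_id,  # gateway IPv4 address exposing the target
        "ClientToken": str(uuid.uuid4()),            # idempotency token for safe retries
    }


# Placeholder values for illustration only.
req = cached_volume_request(
    "arn:aws:storagegateway:us-east-1:111122223333:gateway/sgw-12A3456B",
    size_gib=1024,
    target_name="backup-vol-1",
    network_interface_id="10.0.0.10",
)
```

With credentials configured, you would pass the result to `boto3.client("storagegateway").create_cached_iscsi_volume(**req)`; the response's `TargetARN` identifies the iSCSI target your on-premises servers connect to.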

You also allocate disks on-premises for the VM. These on-premises disks serve the following purposes:

Disks for use by the gateway as cache storage – As your applications write data to the storage volumes in AWS, the gateway initially stores the data on the on-premises disks, referred to as cache storage, before uploading the data to Amazon S3. The cache storage acts as the on-premises durable store for data that is waiting to upload to Amazon S3 from the upload buffer.

The cache storage also lets the gateway store your application’s recently accessed data on-premises for low-latency access. If your application requests data, the gateway first checks the cache storage for the data before checking Amazon S3.

You can use the following guidelines to determine the amount of disk space to allocate for cache storage. Generally, you should allocate at least 20 percent of your existing file store size as cache storage. Cache storage should also be larger than the upload buffer. This latter guideline helps ensure cache storage is large enough to persistently hold all data in the upload buffer that has not yet been uploaded to Amazon S3.

Disks for use by the gateway as the upload buffer – To prepare for upload to Amazon S3, your gateway also stores incoming data in a staging area, referred to as an upload buffer. Your gateway uploads this buffer data over an encrypted Secure Sockets Layer (SSL) connection to AWS, where it is stored encrypted in Amazon S3.
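The cache storage and upload buffer described above can be modeled with a toy, in-memory sketch. It is not how the gateway is implemented: the least-recently-used eviction policy, the block-count capacity, and the names `min_cache_bytes` and `ToyCachedGateway` are assumptions for illustration, and a Python dict stands in for Amazon S3. Pinning not-yet-uploaded blocks in the cache shows why cache storage should be larger than the upload buffer.

```python
from collections import OrderedDict, deque


def min_cache_bytes(file_store_bytes: int, upload_buffer_bytes: int) -> int:
    """Smallest cache meeting both guidelines: at least 20 percent of the
    existing file store, and strictly larger than the upload buffer."""
    twenty_percent = -(-file_store_bytes * 20 // 100)  # ceiling of 20 percent
    return max(twenty_percent, upload_buffer_bytes + 1)


class ToyCachedGateway:
    """Toy model of cache storage plus upload buffer (not AWS's implementation).

    Blocks still waiting in the upload buffer are pinned in the cache so they
    stay durable on-premises; other blocks are evicted least recently used first.
    """

    def __init__(self, s3: dict, cache_blocks: int):
        self.s3 = s3                   # dict standing in for Amazon S3
        self.capacity = cache_blocks   # cache size, counted in blocks
        self.cache = OrderedDict()     # block_id -> data, least recent first
        self.upload_buffer = deque()   # staged (block_id, data) writes, FIFO

    def _evict(self):
        pending = {block_id for block_id, _ in self.upload_buffer}
        for block_id in list(self.cache):
            if len(self.cache) <= self.capacity:
                break
            if block_id not in pending:  # never drop data not yet uploaded
                del self.cache[block_id]

    def write(self, block_id, data):
        self.cache[block_id] = data                  # land in cache storage first
        self.cache.move_to_end(block_id)
        self.upload_buffer.append((block_id, data))  # stage for upload
        self._evict()

    def read(self, block_id):
        if block_id in self.cache:             # 1. check cache storage
            self.cache.move_to_end(block_id)
            return self.cache[block_id], "cache"
        data = self.s3[block_id]               # 2. fall back to Amazon S3
        self.cache[block_id] = data            # keep recently read data local
        self._evict()
        return data, "s3"

    def flush(self):
        """Upload staged writes in arrival order (encrypted in transit, in reality)."""
        while self.upload_buffer:
            block_id, data = self.upload_buffer.popleft()
            self.s3[block_id] = data
        self._evict()                          # uploaded blocks become evictable
```

For example, with the question's 140TB file store and a hypothetical 10TB upload buffer, `min_cache_bytes(140 * 10**12, 10 * 10**12)` returns 28TB, the 20 percent floor.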

You can take incremental backups, called snapshots, of your storage volumes in Amazon S3. These point-in-time snapshots are also stored in Amazon S3 as Amazon EBS snapshots. When you take a new snapshot, only the data that has changed since your last snapshot is stored. You can initiate snapshots on a scheduled or one-time basis. When you delete a snapshot, only the data not needed for any other snapshots is removed.
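The incremental and deletion behavior above can be illustrated with a toy model. Chunks are keyed by block ID plus a SHA-256 content hash (an assumption made for illustration), so a new snapshot stores only chunks not already held, and deleting a snapshot frees only chunks that no other snapshot references. The class `SnapshotStore` is hypothetical and is a simplification, not EBS's internal format.

```python
import hashlib


class SnapshotStore:
    """Toy incremental snapshot store with reference-counted chunks."""

    def __init__(self):
        self.chunks = {}     # chunk key -> data actually stored
        self.refs = {}       # chunk key -> number of snapshots referencing it
        self.snapshots = {}  # snapshot name -> {block_id: chunk key}

    @staticmethod
    def _key(block_id, data: bytes):
        return (block_id, hashlib.sha256(data).hexdigest())

    def take(self, name, volume: dict) -> int:
        """Snapshot `volume` (block_id -> bytes); return newly stored chunk count."""
        block_map, new = {}, 0
        for block_id, data in volume.items():
            key = self._key(block_id, data)
            if key not in self.chunks:   # changed (or new) block: store it
                self.chunks[key] = data
                new += 1
            self.refs[key] = self.refs.get(key, 0) + 1
            block_map[block_id] = key
        self.snapshots[name] = block_map
        return new

    def delete(self, name) -> int:
        """Delete a snapshot; return how many chunks were actually freed."""
        freed = 0
        for key in self.snapshots.pop(name).values():
            self.refs[key] -= 1
            if self.refs[key] == 0:      # no other snapshot needs this chunk
                del self.refs[key], self.chunks[key]
                freed += 1
        return freed


store = SnapshotStore()
print(store.take("mon", {0: b"a", 1: b"b"}))  # 2: first snapshot stores everything
print(store.take("tue", {0: b"a", 1: b"B"}))  # 1: only block 1 changed
print(store.delete("mon"))                     # 1: block 0's chunk is still used by "tue"
```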

You can restore an Amazon EBS snapshot to a gateway storage volume if you need to recover a backup of your data. Alternatively, for snapshots up to 16 TiB in size, you can use the snapshot as a starting point for a new Amazon EBS volume. You can then attach this new Amazon EBS volume to an Amazon EC2 instance.
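The second recovery path can be sketched as a request builder that enforces the 16 TiB limit quoted above and returns keyword arguments for boto3's EC2 `create_volume` call. The helper name, snapshot ID, and Availability Zone are placeholders for illustration.

```python
MAX_EBS_FROM_SNAPSHOT_GIB = 16 * 1024   # 16 TiB limit stated in the text above


def ebs_restore_request(snapshot_id: str, snapshot_size_gib: int,
                        availability_zone: str) -> dict:
    """Hypothetical helper: kwargs for boto3's EC2 create_volume call."""
    if snapshot_size_gib > MAX_EBS_FROM_SNAPSHOT_GIB:
        # Larger snapshots can only be restored to a gateway storage volume.
        raise ValueError("snapshots over 16 TiB must be restored to a gateway volume")
    return {"SnapshotId": snapshot_id, "AvailabilityZone": availability_zone}


# Placeholder values for illustration only.
req = ebs_restore_request("snap-0123456789abcdef0", 2048, "us-east-1a")
```

With credentials configured, `boto3.client("ec2").create_volume(**req)` returns a `VolumeId` that you can pass to `attach_volume(Device=..., InstanceId=..., VolumeId=...)`; the instance must be in the same Availability Zone as the new volume.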

All gateway-cached volume data and snapshot data is stored in Amazon S3 encrypted at rest using server-side encryption (SSE). However, you cannot access this data with the Amazon S3 API or other tools such as the Amazon S3 console.

Answer: D

Why it isn’t A: the data is also hosted on the on-premises server. The 140TB requirement applies to the on-premises file server, not to AWS, and is mostly there to confuse. You just need a backup solution, hence stored volumes instead of cached volumes.

D, since this is a backup question.
