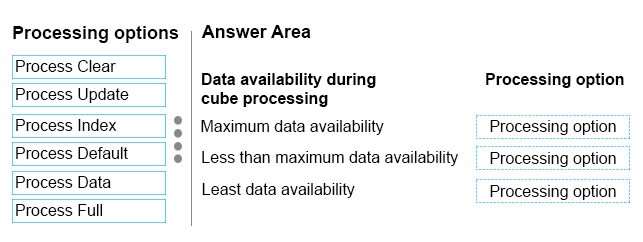

DRAG DROP

Case Study #1

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Background

Wide World Importers imports and sells clothing. The company has a multidimensional Microsoft SQL Server Analysis Services instance. The server has 80 gigabytes (GB) of available physical memory. The following installed services are running on the server:

SQL Server Database Engine

SQL Server Analysis Services (multidimensional)

The database engine instance has been configured for a hard cap of 50 GB, and it cannot be lowered. The instance contains the following cubes: SalesAnalysis, OrderAnalysis.

Reports that are generated based on data from the OrderAnalysis cube take more time to complete when they are generated in the afternoon each day. You examine the server and observe that it is under significant memory pressure.

Processing for all cubes must occur automatically in increments. You create one job to process the cubes and another job to process the dimensions. You must configure a processing task for each job that optimizes performance. As the cubes grow in size, the overnight processing of the cubes often does not complete during the allowed maintenance time window.

SalesAnalysis

The SalesAnalysis cube is currently being tested before being used in production. Users report that day name attribute values are sorted alphabetically. Day name attribute values must be sorted chronologically. Users report that they are unable to query the cube while any cube processing operations are in progress. You need to maximize data availability during cube processing and ensure that you process both dimensions and measures.

OrderAnalysis

The OrderAnalysis cube is used for reporting and ad-hoc queries from Microsoft Excel. The data warehouse team adds a new table named Fact.Transaction to the cube. The Fact.Transaction table includes a column named Total Including Tax. You must add a new measure named Transactions – Total Including Tax to the cube. The measure must be calculated as the sum of the Total Including Tax column across any selected relevant dimensions.

Finance

The Finance cube is used to analyze General Ledger entries for the company.

Requirements

You must minimize the time that it takes to process cubes while meeting the following requirements:

The Sales cube requires overnight processing of dimensions, cubes, measure groups, and partitions.

The OrderAnalysis cube requires overnight processing of dimensions only.

The Finance cube requires overnight processing of dimensions only.

You need to resolve the issues that the users report.

Which processing options should you use? To answer, drag the appropriate processing option to the correct location or locations. Each processing option may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

Select and Place:

Explanation:

Box 1: Process Full

When Process Full is executed against an object that has already been processed, Analysis Services drops all data in the object, and then processes the object. This kind of processing is required when a structural change has been made to an object, for example, when an attribute hierarchy is added, deleted, or renamed.

Box 2: Process Default

Detects the process state of database objects, and performs processing necessary to deliver unprocessed or partially processed objects to a fully processed state. If you change a data binding, Process Default will do a Process Full on the affected object.

Box 3:

Not Process Update: Forces a re-read of data and an update of dimension attributes. Flexible aggregations and indexes on related partitions will be dropped.

Incorrect Answers:

Not Process Clear: Drops the data in the object specified and any lower-level constituent objects. After the data is dropped, it is not reloaded.

Not Process Data: Processes data only without building aggregations or indexes. If there is data in the partitions, it will be dropped before re-populating the partition with source data.

Not Process Index: Creates or rebuilds indexes and aggregations for all processed partitions. For unprocessed objects, this option generates an error. Processing with this option is needed if you turn off Lazy Processing.
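For readers who want to see how a processing option is actually requested, the following is a minimal sketch that builds an XMLA `Batch` command containing one `Process` element per object. It does not assert which option is the correct answer for any box. The database, cube, and dimension IDs in the usage note are hypothetical placeholders, and `build_process_batch` is an illustrative helper, not part of any Microsoft library; only the XMLA element names, the engine namespace, and the `Type` values are taken from the XMLA processing syntax.

```python
import xml.etree.ElementTree as ET

# XMLA engine namespace used by Analysis Services processing commands.
XMLA_NS = "http://schemas.microsoft.com/analysisservices/2003/engine"

# Processing types discussed in the explanation, in their XMLA spelling.
VALID_TYPES = {
    "ProcessFull", "ProcessDefault", "ProcessUpdate",
    "ProcessClear", "ProcessData", "ProcessIndexes",
}


def build_process_batch(database_id, targets):
    """Return an XMLA <Batch> string with one <Process> per target.

    targets is a list of (object_kind, object_id, process_type) tuples,
    where object_kind is "CubeID" or "DimensionID" (hypothetical IDs).
    """
    batch = ET.Element(f"{{{XMLA_NS}}}Batch")
    for kind, object_id, process_type in targets:
        if kind not in ("CubeID", "DimensionID"):
            raise ValueError(f"unsupported object kind: {kind}")
        if process_type not in VALID_TYPES:
            raise ValueError(f"unknown processing type: {process_type}")
        process = ET.SubElement(batch, f"{{{XMLA_NS}}}Process")
        obj = ET.SubElement(process, f"{{{XMLA_NS}}}Object")
        ET.SubElement(obj, f"{{{XMLA_NS}}}DatabaseID").text = database_id
        ET.SubElement(obj, f"{{{XMLA_NS}}}{kind}").text = object_id
        ET.SubElement(process, f"{{{XMLA_NS}}}Type").text = process_type
    return ET.tostring(batch, encoding="unicode")
```

A call such as `build_process_batch("ExampleDB", [("DimensionID", "Date", "ProcessUpdate"), ("CubeID", "SalesAnalysis", "ProcessFull")])` returns a string that could be pasted into an XMLA query window or an Analysis Services job step. Only the `Type` value changes between the options compared above.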

References: https://docs.microsoft.com/en-us/sql/analysis-services/multidimensional-models/processingoptions-and-settings-analysis-services

Shouldn’t it be the opposite? Process Full offers the least data availability.

I think they put Process Full on cube processing for maximum data availability because it keeps the cube processing as a single transaction in the same job. Client users can still query the old version of the data while the job is running. It takes an exclusive lock only at the end of full cube processing, when it commits the transaction (very quick). The job takes the longest to run compared with the other processing types, but it preserves data availability.

Process Update works only on dimensions. However, this question asks about a cube processing issue. I think the last answer should be Process Data.
