You need to design a data storage strategy for each application

###BeginCaseStudy###

Case Study: 2

Trey Research

Background

Overview

Trey Research conducts agricultural research and sells the results to the agriculture and food

industries. The company uses a combination of on-premises and third-party server clusters to

meet its storage needs. Trey Research has seasonal demands on its services, with up to 50

percent drops in data capacity and bandwidth demand during low-demand periods. They plan

to host their websites in an agile, cloud environment where the company can deploy and

remove its websites based on its business requirements rather than the requirements of the

hosting company.

A recent fire near the datacenter that Trey Research uses raises the management team’s

awareness of the vulnerability of hosting all of the company’s websites and data at any single

location. The management team is concerned about protecting its data from loss as a result of

a disaster.

Websites

Trey Research has a portfolio of 300 websites and associated background processes that are

currently hosted in a third-party datacenter. All of the websites are written in ASP.NET, and

the background processes use Windows Services. The hosting environment costs Trey

Research approximately $25 million in hosting and maintenance fees.

Infrastructure

Trey Research also has on-premises servers that run VMs to support line-of-business

applications. The company wants to migrate the line-of-business applications to the cloud,

one application at a time. The company is migrating most of its production VMs from an

aging VMware ESXi farm to a Hyper-V cluster that runs on Windows Server 2012.

Applications

DistributionTracking

Trey Research has a web application named DistributionTracking. This application constantly

collects real-time data that tracks worldwide distribution points to customer retail sites. This

data is available to customers at all times.

The company wants to ensure that the distribution tracking data is stored at a location that is

geographically close to the customers who will be using the information. The system must

continue running in the event of VM failures without corrupting data. The system is

processor intensive and should be run in a multithreading environment.

HRApp

The company has a human resources (HR) application named HRApp that stores data in an

on-premises SQL Server database. The database must have at least two copies, but data to

support backups and business continuity must stay in Trey Research locations only. The data

must remain on-premises and cannot be stored in the cloud.

HRApp was written by a third party, and the code cannot be modified. The human resources

data is used by all business offices, and each office requires access to the entire database.

Users report that HRApp takes all night to generate the required payroll reports, and they

would like to reduce this time.

MetricsTracking

Trey Research has an application named MetricsTracking that is used to track analytics for

the DistributionTracking web application. The data MetricsTracking collects is not customer-facing.

Data is stored on an on-premises SQL Server database, but this data should be moved

to the cloud. Employees at other locations access this data by using a remote desktop

connection to connect to the application, but latency issues degrade the functionality.

Trey Research wants a solution that allows remote employees to access metrics data without

using a remote desktop connection. MetricsTracking was written in-house, and the

development team is available to make modifications to the application if necessary.

However, the company wants to continue to use SQL Server for MetricsTracking.

Business Requirements

Business Continuity

You have the following requirements:

• Move all customer-facing data to the cloud.

• Web servers should be backed up to geographically separate locations.

• If one website becomes unavailable, customers should automatically be routed to

websites that are still operational.

• Data must be available regardless of the operational status of any particular website.

• The HRApp system must remain on-premises and must be backed up.

• The MetricsTracking data must be replicated so that it is locally available to all Trey

Research offices.
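
The automatic-routing requirement above can be illustrated with a minimal Python sketch. It is not part of the case study: the site names and health states are hypothetical, and a real deployment would normally rely on a managed traffic-routing service rather than hand-written code. The sketch only shows the idea of sending a customer to the first site that still reports healthy.

```python
from typing import Optional


def pick_site(sites: list[str], is_healthy: dict[str, bool]) -> Optional[str]:
    """Return the first site, in priority order, that is reported healthy."""
    for site in sites:
        # A site with no health report is treated as unavailable.
        if is_healthy.get(site, False):
            return site
    return None


# Hypothetical site names and health states, for illustration only.
sites = ["site-primary", "site-secondary", "site-tertiary"]
health = {"site-primary": False, "site-secondary": True, "site-tertiary": True}

print(pick_site(sites, health))  # prints "site-secondary"
```

Because the data requirement in the same list says data must stay available regardless of any one website's status, the data tier would need to be reachable from every site in the list, not only from the primary.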

Auditing and Security

You have the following requirements:

• Both internal and external consumers should be able to access research results.

• Internal users should be able to access data by using their existing company

credentials without requiring multiple logins.

• Consumers should be able to access the service by using their Microsoft credentials.

• Applications written to access the data must be authenticated.

• Access and activity must be monitored and audited.

• Ensure the security and integrity of the data collected from the worldwide distribution

points for the distribution tracking application.

Storage and Processing

You have the following requirements:

• Provide real-time analysis of distribution tracking data by geographic location.

• Collect and store large datasets of real-time data for customer use.

• Locate the distribution tracking data as close to the central office as possible to

improve bandwidth.

• Co-locate the distribution tracking data as close to the customer as possible based on

the customer’s location.

• Distribution tracking data must be stored in the JSON format and indexed by

metadata that is stored in a SQL Server database.

• Data in the cloud must be stored in geographically separate locations, but kept within

the same political boundaries.
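
The requirement that JSON tracking data be indexed by metadata held in SQL Server can be sketched as follows. This is an illustration under stated assumptions, not the case study's design: an in-memory SQLite database stands in for SQL Server, a Python dict stands in for the JSON document store, and the table name, column names, and sample records are all hypothetical.

```python
import json
import sqlite3

# Assumption: in-memory SQLite stands in for the SQL Server metadata database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tracking_index ("
    " doc_id TEXT PRIMARY KEY,"
    " region TEXT NOT NULL,"
    " recorded_at TEXT NOT NULL)"
)
conn.execute("CREATE INDEX ix_region ON tracking_index (region)")

# Assumption: a dict stands in for whatever store holds the JSON documents.
json_store: dict[str, str] = {}


def save_record(doc_id: str, region: str, recorded_at: str, payload: dict) -> None:
    """Store the JSON document and record its metadata in the index table."""
    json_store[doc_id] = json.dumps(payload)
    conn.execute(
        "INSERT INTO tracking_index (doc_id, region, recorded_at) VALUES (?, ?, ?)",
        (doc_id, region, recorded_at),
    )


def find_by_region(region: str) -> list[dict]:
    """Look up document ids by metadata, then fetch and decode the JSON."""
    rows = conn.execute(
        "SELECT doc_id FROM tracking_index WHERE region = ? ORDER BY recorded_at",
        (region,),
    ).fetchall()
    return [json.loads(json_store[doc_id]) for (doc_id,) in rows]


# Hypothetical sample records.
save_record("d1", "eu", "2015-01-01T08:00:00", {"point": "A", "units": 10})
save_record("d2", "us", "2015-01-01T09:00:00", {"point": "B", "units": 4})
save_record("d3", "eu", "2015-01-01T10:00:00", {"point": "C", "units": 7})

print(find_by_region("eu"))
```

The split keeps queries on the small relational index and fetches each JSON document by key, which is one plausible reading of "indexed by metadata".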

Technical Requirements

Migration

You have the following requirements:

• Deploy all websites to Azure.

• Replace on-premises and third-party physical server clusters with cloud-based

solutions.

• Optimize the speed for retrieving existing JSON objects that contain the distribution

tracking data.

• Recommend strategies for partitioning data for load balancing.
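
One common partitioning strategy for load balancing is to hash a key and use the result to choose a partition. The Python sketch below is an assumption-laden illustration, not something the case study prescribes: the key format and the partition count of four are hypothetical, and hash partitioning is only one of several strategies a recommendation might cover.

```python
import hashlib


def partition_for(key: str, partition_count: int) -> int:
    """Map a key to a partition index using a stable hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer so the result is the same on every run.
    return int.from_bytes(digest[:8], "big") % partition_count


# Hypothetical keys; count how many land in each of 4 partitions.
keys = [f"customer-{n}" for n in range(1000)]
counts = [0] * 4
for key in keys:
    counts[partition_for(key, 4)] += 1

print(counts)
```

A stable hash such as SHA-256 is used instead of Python's built-in `hash`, which is randomized per process for strings and so would not give repeatable placement.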

Auditing and Security

You have the following requirements:

• Use Active Directory for internal and external authentication.

• Use OAuth for application authentication.

Business Continuity

You have the following requirements:

• Data must be backed up to separate geographic locations.

• Web servers must run concurrent versions of all websites in distinct geographic

locations.

• Use Azure to back up the on-premises MetricsTracking data.

• Use Azure virtual machines as a recovery platform for MetricsTracking and HRApp.

• Ensure that there is at least one additional on-premises recovery environment for the

HRApp.

###EndCaseStudy###

HOTSPOT

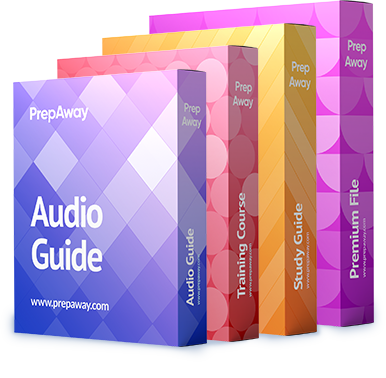

You need to design a data storage strategy for each application.

In the table below, identify the strategy that you should use for each application. Make only one

selection in each column.

You need to recommend a test strategy for the disaster recovery system

DRAG DROP

You need to recommend a test strategy for the disaster recovery system.

What should you do? To answer, drag the appropriate test strategy to the correct business

application. Each test strategy may be used once, more than once, or not at all. You may need to

drag the split bar between panes or scroll to view content.

You need to configure the distribution tracking application

You need to configure the distribution tracking application.

What should you do?

Which four Azure PowerShell scripts should you run in sequence?

###BeginCaseStudy###

Case Study: 3

Contoso, Ltd

Background

Overview

Contoso, Ltd., manufactures and sells golf clubs and golf balls. Contoso also sells golf

accessories under the Contoso Golf and Odyssey brands worldwide.

Most of the company’s IT infrastructure is located in the company’s Carlsbad, California,

headquarters. Contoso also has a sizable third-party colocation datacenter that costs the

company USD $30,000 to $40,000 a month. Contoso has other servers scattered around the

United States.

Contoso, Ltd., has the following goals:

• Move many consumer-facing websites, enterprise databases, and enterprise web

services to Azure.

• Improve the performance for customers and resellers who access company

websites from around the world.

• Provide support for provisioning resources to meet bursts of demand.

• Consolidate and improve the utilization of website- and database-hosting resources.

• Avoid downtime, particularly that caused by web and database server updating.

• Leverage familiarity with Microsoft server management tools.

Infrastructure

Contoso’s datacenters are filled with dozens of smaller web servers and databases that run on

under-utilized hardware. This creates issues for data backup. Contoso currently backs up data

to tape by using System Center Data Protection Manager. System Center Operations Manager

is not deployed in the enterprise.

All of the servers are expensive to acquire and maintain, and scaling the infrastructure takes

significant time. Contoso conducts weekly server maintenance, which causes downtime for

some of its global offices. Special events, such as high-profile golf tournaments, create a

large increase in site traffic. Contoso has difficulty scaling the web-hosting environment fast

enough to meet these surges in site traffic.

Contoso has resellers and consumers in Japan and China. These resellers must use

applications that run in a datacenter that is located in the state of Texas, in the United States.

Because of the physical distance, the resellers experience slow response times and downtime.

Business Requirements

Management and Performance

Management

• Web servers and databases must automatically apply updates to the operating system

and products.

• Automatically monitor the health of worldwide sites, databases, and virtual machines.

• Automatically back up the website and databases.

• Manage hosted resources by using on-premises tools.

Performance

• The management team would like to centralize data backups and eliminate the use of

tapes.

• The website must automatically scale without code changes or redeployment.

• Support changes in service tier without reconfiguration or redeployment.

• Site-hosting must automatically scale to accommodate data bandwidth and number of

connections.

• Scale databases without requiring migration to a larger server.

• Migrate business critical applications to Azure.

• Migrate databases to the cloud and centralize databases where possible.

Business Continuity and Support

Business Continuity

• Minimize downtime in the event of regional disasters.

• Recover data if unintentional modifications or deletions are discovered.

• Run the website on multiple web server instances to minimize downtime and support

a high service level agreement (SLA).

Connectivity

• Allow enterprise web services to access data and other services located on-premises.

• Provide and monitor the lowest possible latency to website visitors.

• Automatically balance traffic among all web servers.

• Provide secure transactions for users of both legacy and modern browsers.

• Provide automated auditing and reporting of web servers and databases.

• Support single sign-on from multiple domains.

Development Environment

You identify the following requirements for the development environment:

• Support the current development team’s knowledge of Microsoft web development

and SQL Server tools.

• Support building experimental applications by using data from the Azure deployment

and on-premises data sources.

• Mitigate the need to purchase additional tools for monitoring and debugging.

• System designers and architects must be able to create custom Web APIs without

requiring any coding.

• Support automatic website deployment from source control.

• Support automated build verification and testing to mitigate bugs introduced during

builds.

• Manage website versions across all deployments.

• Ensure that website versions are consistent across all deployments.

Technical Requirements

Management and Performance

Management

• Use build automation to deploy directly from Visual Studio.

• Use build-time versioning of assets and builds/releases.

• Automate common IT tasks such as VM creation by using Windows PowerShell

workflows.

• Use advanced monitoring features and reports of workloads in Azure by using

existing Microsoft tools.

Performance

• Websites must automatically load balance across multiple servers to adapt to varying

traffic.

• In production, websites must run on multiple instances.

• First-time published websites must be published by using Visual Studio and scaled to

a single instance to test publishing.

• Data storage must support automatic load balancing across multiple servers.

• Websites must adapt to wide increases in traffic during special events.

• Azure virtual machines (VMs) must be created in the same datacenter when

applicable.

Business Continuity and Support

Business Continuity

• Automatically co-locate data and applications in different geographic locations.

• Provide real-time reporting of changes to critical data and binaries.

• Provide real-time alerts of security exceptions.

• Unwanted deletions or modifications of data must be reversible for up to one month,

especially in business critical applications and databases.

• Any cloud-hosted servers must be highly available.
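
The one-month reversal requirement in the list above amounts to a retention-window check. The Python sketch below is illustrative only: it approximates "one month" as 30 days, which is an assumption, and the function name and sample dates are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: "one month" is approximated as 30 days for this sketch.
RETENTION = timedelta(days=30)


def can_restore(changed_at: datetime, now: datetime) -> bool:
    """Return True if the change is still inside the retention window."""
    return timedelta(0) <= now - changed_at <= RETENTION


# Hypothetical dates.
now = datetime(2015, 6, 30)
print(can_restore(datetime(2015, 6, 10), now))  # 20 days old: prints True
print(can_restore(datetime(2015, 5, 1), now))   # 60 days old: prints False
```

Any backup or point-in-time restore option chosen for this requirement would need a retention period at least as long as the window checked here.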

Enterprise Support

• The solution must use stored procedures to access on-premises SQL Server data from

Azure.

• A debugger must automatically attach to websites on a weekly basis. The scripts that

handle the configuration and setup of debugging cannot work if there is a delay in

attaching the debugger.

###EndCaseStudy###

DRAG DROP

You need to deploy the virtual machines to Azure.

Which four Azure PowerShell scripts should you run in sequence? To answer, move the appropriate

scripts from the list of scripts to the answer area and arrange them in the correct order.

Which tools should you use?

HOTSPOT

You need to implement tools at the client’s location for monitoring and deploying Azure resources.

Which tools should you use? To answer, select the appropriate on-premises tool for each task in the

answer area.

What should you implement?

###BeginCaseStudy###

Case Study: 3

Contoso, Ltd

Background

Overview

Contoso, Ltd., manufactures and sells golf clubs and golf balls. Contoso also sells golf

accessories under the Contoso Golf and Odyssey brands worldwide.

Most of the company’s IT infrastructure is located in the company’s Carlsbad, California,

headquarters. Contoso also has a sizable third-party colocation datacenter that costs the

company USD $30,000 to $40,000 a month. Contoso has other servers scattered around the

United States.

Contoso, Ltd., has the following goals:

• Move many consumer-facing websites, enterprise databases, and enterprise web

services to Azure.

• Improve the performance for customers and resellers who access company

websites from around the world.

• Provide support for provisioning resources to meet bursts of demand.

• Consolidate and improve the utilization of website- and database-hosting resources.

• Avoid downtime, particularly that caused by web and database server updating.

• Leverage familiarity with Microsoft server management tools.

Infrastructure

Contoso’s datacenters are filled with dozens of smaller web servers and databases that run on

under-utilized hardware. This creates issues for data backup. Contoso currently backs up data

to tape by using System Center Data Protection Manager. System Center Operations Manager

is not deployed in the enterprise.

All of the servers are expensive to acquire and maintain, and scaling the infrastructure takes

significant time. Contoso conducts weekly server maintenance, which causes downtime for

some of its global offices. Special events, such as high-profile golf tournaments, create a

large increase in site traffic. Contoso has difficulty scaling the web-hosting environment fast

enough to meet these surges in site traffic.

Contoso has resellers and consumers in Japan and China. These resellers must use

applications that run in a datacenter that is located in the state of Texas, in the United States.

Because of the physical distance, the resellers experience slow response times and downtime.

Business Requirements

Management and Performance

Management

• Web servers and databases must automatically apply updates to the operating system

and products.

• Automatically monitor the health of worldwide sites, databases, and virtual machines.

• Automatically back up the website and databases.

• Manage hosted resources by using on-premises tools.

Performance

• The management team would like to centralize data backups and eliminate the use of

tapes.

• The website must automatically scale without code changes or redeployment.

• Support changes in service tier without reconfiguration or redeployment.

• Site-hosting must automatically scale to accommodate data bandwidth and number of

connections.

• Scale databases without requiring migration to a larger server.

• Migrate business critical applications to Azure.

• Migrate databases to the cloud and centralize databases where possible.

Business Continuity and Support

Business Continuity

• Minimize downtime in the event of regional disasters.

• Recover data if unintentional modifications or deletions are discovered.

• Run the website on multiple web server instances to minimize downtime and support

a high service level agreement (SLA).

Connectivity

• Allow enterprise web services to access data and other services located on-premises.

• Provide and monitor lowest latency possible to website visitors.

• Automatically balance traffic among all web servers.

• Provide secure transactions for users of both legacy and modern browsers.

• Provide automated auditing and reporting of web servers and databases.

• Support single sign-on from multiple domains.

Development Environment

You identify the following requirements for the development environment:

• Support the current development team’s knowledge of Microsoft web development

and SQL Server tools.

• Support building experimental applications by using data from the Azure deployment

and on-premises data sources.

• Mitigate the need to purchase additional tools for monitoring and debugging.

• System designers and architects must be able to create custom Web APIs without

requiring any coding.

• Support automatic website deployment from source control.

• Support automated build verification and testing to mitigate bugs introduced during

builds.

• Manage website versions across all deployments.

• Ensure that website versions are consistent across all deployments.

Technical Requirements

Management and Performance

Management

• Use build automation to deploy directly from Visual Studio.

• Use build-time versioning of assets and builds/releases.

• Automate common IT tasks such as VM creation by using Windows PowerShell

workflows.

• Use advanced monitoring features and reports of workloads in Azure by using

existing Microsoft tools.

Performance

• Websites must automatically load balance across multiple servers to adapt to varying

traffic.

• In production, websites must run on multiple instances.

• First-time published websites must be published by using Visual Studio and scaled to

a single instance to test publishing.

• Data storage must support automatic load balancing across multiple servers.

• Websites must adapt to wide increases in traffic during special events.

• Azure virtual machines (VMs) must be created in the same datacenter when

applicable.

Business Continuity and Support

Business Continuity

• Automatically co-locate data and applications in different geographic locations.

• Provide real-time reporting of changes to critical data and binaries.

• Provide real-time alerts of security exceptions.

• Unwanted deletions or modifications of data must be reversible for up to one month,

especially in business critical applications and databases.

• Any cloud-hosted servers must be highly available.

Enterprise Support

• The solution must use stored procedures to access on-premises SQL Server data from

Azure.

• A debugger must automatically attach to websites on a weekly basis. The scripts that

handle the configuration and setup of debugging cannot work if there is a delay in

attaching the debugger.

###EndCaseStudy###

You need to configure availability for the virtual machines that the company is migrating to Azure.

What should you implement?

What should you recommend?

DRAG DROP

You need to recommend network connectivity solutions for the experimental applications.

What should you recommend? To answer, drag the appropriate solution to the correct network

connection requirements. Each solution may be used once, more than once, or not at all. You may

need to drag the split bar between panes or scroll to view content.

Which two actions should you perform?

You need to recommend a solution for publishing one of the company websites to Azure and

configuring it for remote debugging.

Which two actions should you perform? Each correct answer presents part of the solution.

Which three applications should you monitor?

###BeginCaseStudy###

Case Study: 4

Lucerne Publishing

Background

Overview

Lucerne Publishing creates, stores, and delivers online media for advertising companies. This

media is streamed to computers by using the web, and to mobile devices around the world by

using native applications. The company currently supports the iOS, Android, and Windows

Phone 8.1 platforms.

Lucerne Publishing uses proprietary software to manage its media workflow. This software

has reached the end of its lifecycle. The company plans to move its media workflows to the

cloud. Lucerne Publishing provides access to its customers, who are third-party companies,

so that they can download, upload, search, and index media that is stored on Lucerne

Publishing servers.

Apps and Applications

Lucerne Publishing develops the applications that customers use to deliver media. The

company currently provides the following media delivery applications:

• Lucerne Media W – a web application that delivers media by using any browser

• Lucerne Media M – a mobile app that delivers media by using Windows Phone 8.1

• Lucerne Media A – a mobile app that delivers media by using an iOS device

• Lucerne Media N – a mobile app that delivers media by using an Android device

• Lucerne Media D – a desktop client application that customers install on their local

computer

Business Requirements

Lucerne Publishing’s customers and their consumers have the following requirements:

• Access to media must be time-constricted once media is delivered to a consumer.

• The time required to download media to mobile devices must be minimized.

• Customers must have 24-hour access to media downloads regardless of their location

or time zone.

• Lucerne Publishing must be able to monitor the performance and usage of its

customer-facing app.

Lucerne Publishing wants to make its asset catalog searchable without requiring a database

redesign.

• Customers must be able to access all data by using a web application. They must also

be able to access data by using a mobile app that is provided by Lucerne Publishing.

• Customers must be able to search for media assets by key words and media type.

• Lucerne Publishing wants to move the asset catalog database to the cloud without

formatting the source data.
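
The requirement above that access to media be time-limited after delivery can be illustrated with a signed link that carries its own expiry. The following is only a minimal sketch under stated assumptions: the names SECRET, sign, make_link, and is_valid are hypothetical, the key is a placeholder, and this is not the Azure API or Lucerne Publishing's design.

```python
import hashlib
import hmac
import time

SECRET = b"demo-secret"  # hypothetical key; a real system would load this from secure configuration


def sign(path: str, expires_at: int, key: bytes = SECRET) -> str:
    """Return an HMAC-SHA256 signature over the media path and its expiry time."""
    message = f"{path}\n{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def make_link(path: str, ttl_seconds: int, now=None) -> str:
    """Build a link that stops validating ttl_seconds after it is issued."""
    now = int(time.time()) if now is None else now
    expires_at = now + ttl_seconds
    return f"{path}?exp={expires_at}&sig={sign(path, expires_at)}"


def is_valid(path: str, expires_at: int, sig: str, now=None) -> bool:
    """Accept the request only if the link has not expired and the signature matches."""
    now = int(time.time()) if now is None else now
    return now <= expires_at and hmac.compare_digest(sig, sign(path, expires_at))
```

Because the expiry is part of the signed message, a consumer cannot extend access by editing the exp value; the signature check fails for any altered path or expiry.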

Other Requirements

Development

Code and current development documents must be backed up at all times. All solutions must

be automatically built and deployed to Azure when code is checked in to source control.

Network Optimization

Lucerne Publishing has a .NET web application that runs on Azure. The web application

analyzes storage and the distribution of its media assets. It needs to monitor the utilization of

the web application. Ultimately, Lucerne Publishing hopes to cut its costs by reducing data

replication without sacrificing its quality of service to its customers. The solution has the

following requirements:

• Optimize the storage location and amount of duplication of media.

• Vary several parameters including the number of data nodes and the distance from

node to customers.

• Minimize network bandwidth.

• Lucerne Publishing wants to be notified of exceptions in the web application.

Technical Requirements

Data Mining

Lucerne Publishing constantly mines its data to identify customer patterns. The company

plans to replace the existing on-premises cluster with a cloud-based solution. Lucerne

Publishing has the following requirements:

Virtual machines:

• The data mining solution must support the use of hundreds to thousands of processing

cores.

• Minimize the number of virtual machines by using more powerful virtual machines.

Each virtual machine must always have eight or more processor cores available.

• Allow the number of processor cores dedicated to an analysis to grow and shrink

automatically based on the demand of the analysis.

• Virtual machines must use remote direct memory access (RDMA) to improve performance.

Task scheduling:

The solution must automatically schedule jobs. The scheduler must distribute the jobs based

on the demand and available resources.
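
As an illustration of distributing jobs by demand and available resources, the sketch below places the largest jobs first on whichever node currently has the most free cores. It is a hedged example only: Node, schedule, and the job and node names are hypothetical, and the greedy policy is one simple choice, not the scheduler the case study expects.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cores: int
    jobs: list = field(default_factory=list)


def schedule(jobs: dict, nodes: list) -> list:
    """Greedily assign jobs (name -> cores needed) to nodes; return jobs that did not fit."""
    # Max-heap on free cores, stored as negative values because heapq is a min-heap.
    heap = [(-node.free_cores, index) for index, node in enumerate(nodes)]
    heapq.heapify(heap)
    pending = []
    # Place the most demanding jobs first so they get the emptiest nodes.
    for job_name, cores in sorted(jobs.items(), key=lambda item: -item[1]):
        _, index = heapq.heappop(heap)
        node = nodes[index]
        if node.free_cores >= cores:
            node.free_cores -= cores
            node.jobs.append(job_name)
        else:
            # Even the emptiest node cannot host this job right now.
            pending.append(job_name)
        heapq.heappush(heap, (-node.free_cores, index))
    return pending
```

For example, with nodes of 8 and 16 free cores and jobs needing 12, 8, and 6 cores, the first two jobs are placed and the 6-core job is returned as pending until capacity grows.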

Data analysis results:

The solution must provide a web service that allows applications to access the results of

analyses.

Other Requirements

Feature Support

• Ad copy data must be searchable in full text.

• Ad copy data must be indexed to optimize search speed.

• Media metadata must be stored in Azure Table storage.

• Media files must be stored in Azure Blob storage.

• The customer-facing website must have access to all ad copy and media.

• The customer-facing website must automatically scale and replicate to locations

around the world.

• Media and data must be replicated around the world to decrease the latency of data

transfers.

• Media uploads must have fast data transfer rates (low latency) without the need to

upload the data offline.

Security

• Customer access must be managed by using Active Directory.

• Media files must be encrypted by using the PlayReady encryption method.

• Customers must be able to upload media quickly and securely over a private

connection with no opportunity for internet snooping.

###EndCaseStudy###

You need to analyze Lucerne’s performance monitoring solution.

Which three applications should you monitor? Each correct answer presents a complete solution.

You need to architect the global website strategy to meet the business requirements

HOTSPOT

The company has two corporate offices. Customers will access the websites from datacenters

around the world.

You need to architect the global website strategy to meet the business requirements. Use the dropdown menus to select the answer choice that answers each question.