Which parameters should you add to usp_AddXMLOrder on line 04 and line 05?

###BeginCaseStudy###

Case Study: 6

Coho Winery

Overview

You are a database developer for a company named Coho Winery. Coho Winery has an

office in London.

Coho Winery has an application that is used to process purchase orders from customers and

retailers in 10 different countries.

The application uses a web front end to process orders from the Internet. The web front end

adds orders to a database named Sales. The Sales database is managed by a server named

Server1.

An empty copy of the Sales database is created on a server named Server2 in the London

office. The database will store sales data for customers in Europe.

A new version of the application is being developed. In the new version, orders will be placed

either by using the existing web front end or by loading an XML file.

Once a week, you receive two files that contain the purchase orders and the order details of

orders from offshore facilities.

You run the usp_ImportOders stored procedure and the usp_ImportOrderDetails stored

procedure to copy the offshore facility orders to the Sales database.

The Sales database contains a table named Orders that has more than 20 million rows.

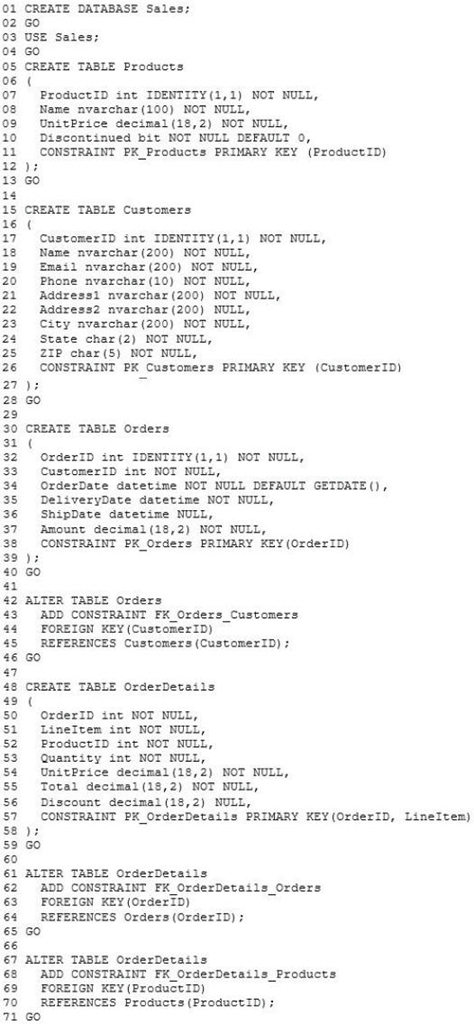

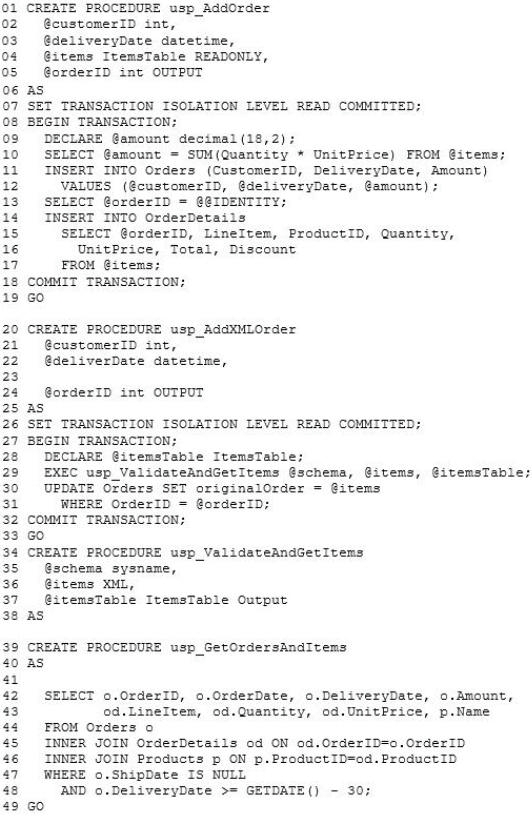

Database Definitions

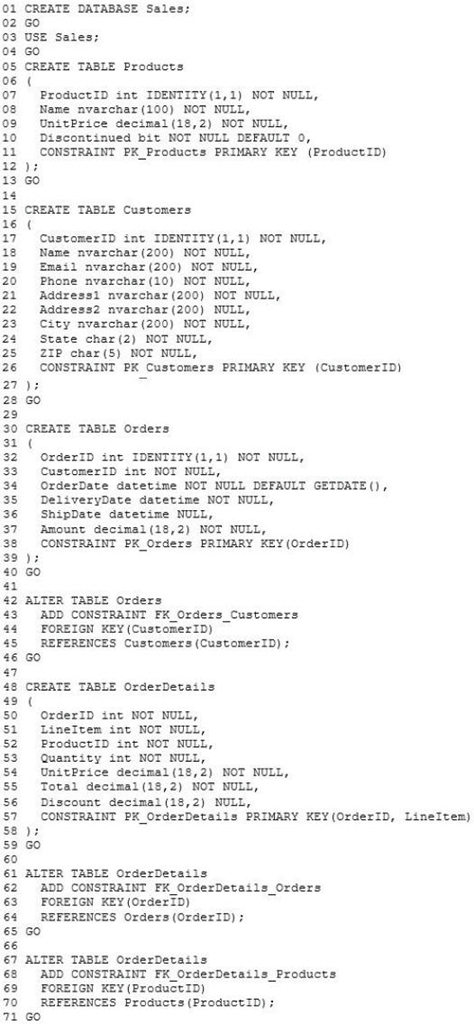

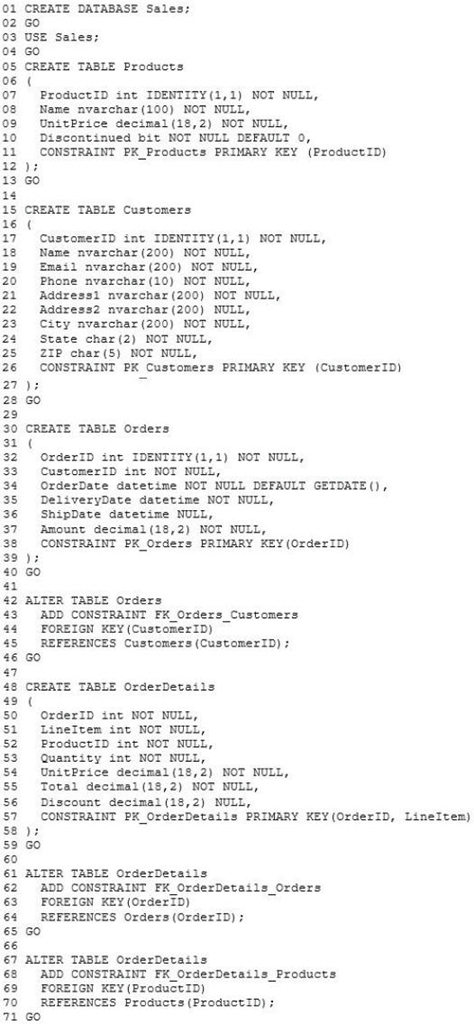

Database and Tables

The following scripts are used to create the database and its tables:

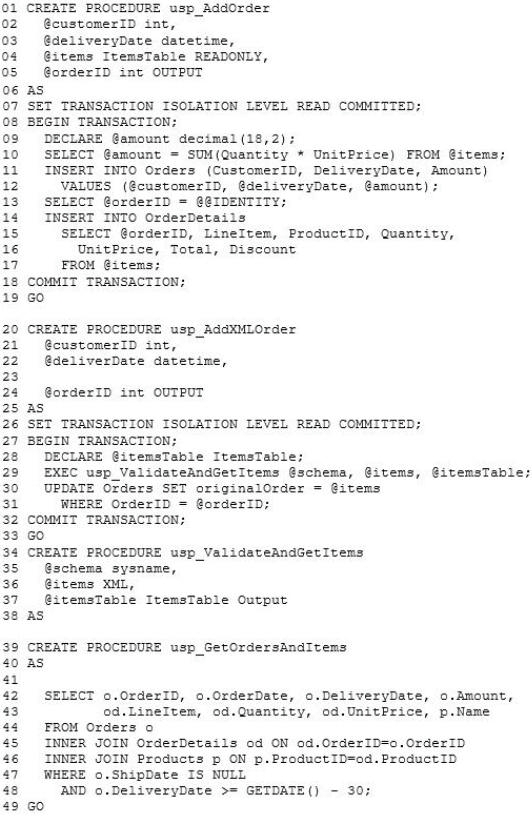

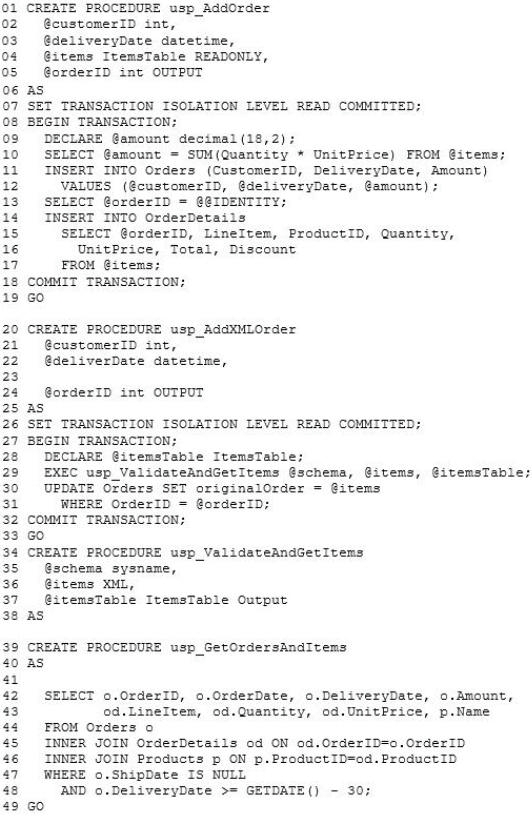

Stored Procedures

The following are the definitions of the stored procedures used in the database:

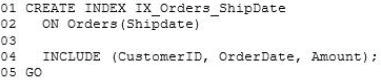

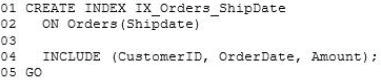

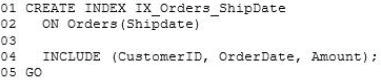

Indexes

The following indexes are part of the Sales database:

Data Import

The XML files will contain the list of items in each order. Each retailer will have its own

XML schema and will be able to use different types of encoding. Each XML schema will use

a default namespace. The default namespaces are not guaranteed to be unique.

For testing purposes, you receive an XSD file from a customer.

For testing purposes, you also create an XML schema collection named ValidateOrder.

ValidateOrder contains schemas for all of the retailers.

The new version of the application must validate the XML file, parse the data, and store the

parsed data along with the original XML file in the database. The original XML file must be

stored without losing any data.

Reported Issues

Performance Issues

You notice the following for the usp_GetOrdersAndItems stored procedure:

• The stored procedure takes a long time to complete.

• Less than two percent of the rows in the Orders table are retrieved by

usp_GetOrdersAndItems.

• A full table scan runs when the stored procedure executes.

• The amount of disk space used and the amount of time required to insert data are very

high.

You notice that the usp_GetOrdersByProduct stored procedure uses a table scan when the

stored procedure is executed.

Page Split Issues

Updates to the Orders table cause excessive page splits on the IX_Orders_ShipDate index.

Requirements

Site Requirements

Users located in North America must be able to view sales data for customers in North

America and Europe in a single report. The solution must minimize the amount of traffic over

the WAN link between the offices.

Bulk Insert Requirements

The usp_ImportOrderDetails stored procedure takes more than 10 minutes to complete. The

stored procedure runs daily. If the stored procedure fails, you must ensure that the stored

procedure restarts from the last successful set of rows.

Index Monitoring Requirements

The usage of indexes in the Sales database must be monitored continuously. Monitored data

must be maintained if a server restarts. The monitoring solution must minimize the usage of

memory resources and processing resources.

###EndCaseStudy###

You need to ensure that usp_AddXMLOrder can be used to validate the XML input from

there tailers.

Which parameters should you add to usp_AddXMLOrder on line 04 and line 05? (Each

correct answer presents part of the solution. Choose all that apply.)

Which design approach should you use?

###BeginCaseStudy###

Case Study: 4

WingTip Toys

General Background

You are a data architect for WingTip Toys. The company uses SQL Server 2012 Enterprise

edition. SQL Server Analysis Services (SSAS) and SQL Server Reporting Services (SSRS)

are installed on separate servers.

Data Warehouse

The company’s data warehouse initially contained less than 100 MB and 100 million rows of

data from only one data source. It now contains more than 10 TB and 10 billion rows of data,

in 25 tables, from 12 data sources.

The largest table in the data warehouse, the factOrders table, contains 5 TB of data. The

factOrders table contains three date keys: OrderDateKey InvoiceDateKey, and ShipDateKey.

The data warehouse server has 1 TB of RAM. Memory usage is currently at 20 percent.

One billion rows of data are added to the data warehouse each month. New data is copied

each night from the data sources into SQL Server staging tables, and existing records are not

updated. The largest data set is order information, which is loaded in parallel into multiple

staging tables, one for each data source. All the staging tables have the same structure and

belong to the same filegroup as the factOrders table.

The dimCustomers table stores customer information that may change over time.

Data Models

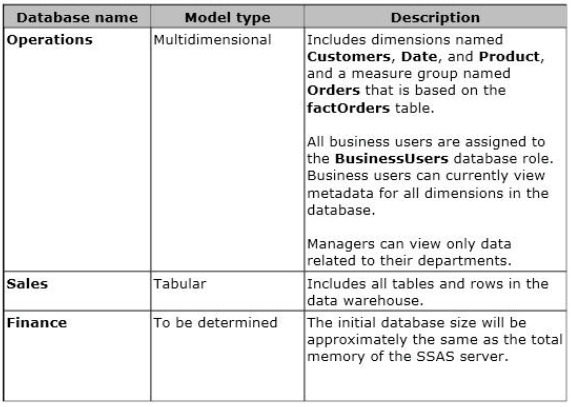

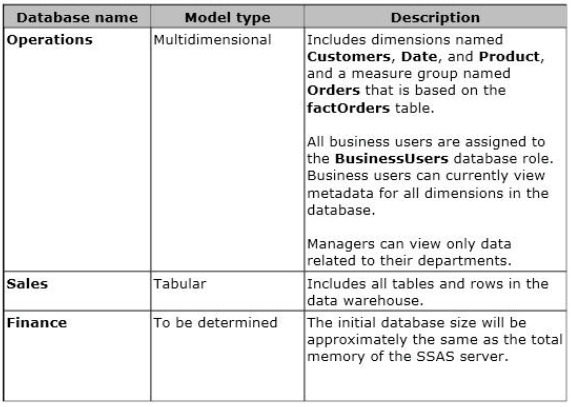

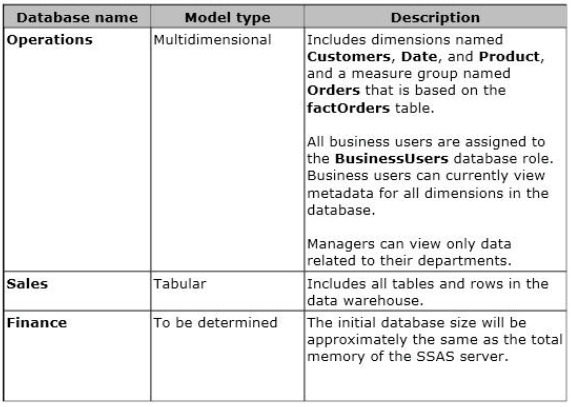

You are developing three SSAS databases, as described in the following table.

Reporting

Business users frequently generate reports in Microsoft Excel by using PowerPivot. The

PowerPivot Management Dashboard does not currently display any usage data.

Several SSRS reports exist that use the data warehouse as a source. The data warehouse

queries are aggregate queries that use the factOrders table and one or more dimension tables.

All SSRS data sources use Integrated Windows authentication.

SSRS displays a security access error message when managers run SSRS reports based on the

Operations database.

Reporting performance has become unacceptably slow.

Business Requirements

Improve the query speed of the SSRS reports.

Allow business users to create reports by using PowerPivot and Power View.

Ensure that all users other than business users can view metadata for the Customers

dimension. Ensure that business users cannot view metadata for the Customers dimension.

Technical Requirements

Modify the tables in the data warehouse to minimize aggregate query processing time.

Minimize disk storage in the data warehouse.

Ensure that all multidimensional models process data as quickly as possible.

Create a fact table named factCustomerContact in the data warehouse to store the contact

date, customer key, and communication type for each instance of customer contact.

Store the history of customer information changes in the dimCustomers table.

Move data from the staging tables into the factOrders table as quickly as possible. When

creating dimensions for the date keys in the factOrders table, minimize storage space

requirements and optimize the cube processing time.

Ensure that queries against the Sales database return the most current data in the data

warehouse.

Ensure that the SSAS model of the Finance database does not page to disk or return a

memory error as the size of the database grows.

Create an SSAS monitoring solution that tracks the following data:

• Queries answered per second

• Queries from cache direct per second

• Queries from file per second.

###EndCaseStudy###

You need to design the dimCustomers table.

Which design approach should you use?

You need to ensure that subscriptions can be delivered by using email

###BeginCaseStudy###

Case Study: 1

Tailspin Toys

Background

Tailspin Toys is a multinational company that manufactures toys. Tailspin Toys has offices in

five regions worldwide. The company sells toys at various retail stores. The company also

sells toys directly to consumers through a web site.

The company has the following departments:

• Sales

• Distribution

• Manufacturing

Each department has an office in each region.

The fiscal calendar of Tailspin Toys runs from June to May.

The network contains a server farm that has Microsoft SharePoint Server 2013 installed.

Existing Environment

Current Database Environment

Each department uses SharePoint team sites for internal collaboration.

All manufacturing information is stored in a relational database named Manufacturing. All

sales information is stored in a relational database named Sales.

Tailspin Toys deploys SQL Server Analysis Services (SSAS) and configures SSAS to use

tabular models. SSAS will be used for all sales reports.

Tailspin Toys deploys a SQL Server Reporting Services (SSRS) instance in SharePoint

mode.

Sales Database

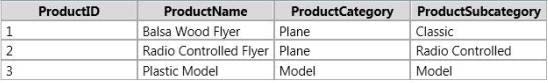

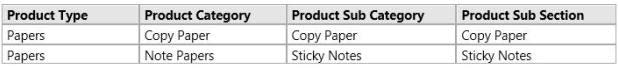

A database named Sales contains two tables named FactSales and DimProduct. FactSales

contains the following columns:

• SalesID

• Total Due

• OrderDate

DimProduct contains the following columns:

• ProductID

• ProductName

• ProductCategory

• ProductSubcategory

The Sales database contains information about the products. Most of the products have a

category and a subcategory. Certain products only have a category.

A sample from DimProduct is shown in the following table.

Requirements

Security Requirements

Tailspin Toys identifies the following security requirement:

• Sales department users must be allowed to view the sales transactions from their

region only.

• Sales department users must be able to view the contents of the manufacturing

reports.

• Manufacturing department users must be able to create new manufacturing reports.

• Third-party and custom solutions must NOT be deployed to the reporting server.

• Sales department users must NOT be able to create new manufacturing reports.

Planned Reporting Implementation

The manufacturing department plans to use the SSRS instance for its reports. The

manufacturing department also plans to make its reports accessible from SharePoint. All

manufacturing reports will use an existing database named Manufacturing.

Reporting Requirements

Tailspin Toys identifies the following reporting requirements:

• All reports must contain the company logo and a header that contains the date and the

time that the report was executed.

• All reports must be created by using the SQL Server Data Tools.

Manufacturing report

You plan to create a report named Manufacturinglssues.rdl. The report has the following

requirements:

• Manufacturing department managers must be able to view product issues by product

type, manufacturing plant location, and error type.

• The manufacturing department managers must be able to change views by choosing

options from drop-down lists.

Sales reports

You plan to create a sales report named RegionalSales.rdl. The report has the following

requirements:

• Users must be able to view the report by using a web browser. By default,

subcategories and product details must be hidden when using the browser.

• Users must be able to subscribe to receive the report by email. The report must be sent

by email as a PDF attachment.

You plan to create a quarterly sales report named QuarterSales.rdl. The report must display

sales data by fiscal quarter.

Technical Requirements

Tailspin Toys identifies the following technical requirements:

• Products in the DimProduct table that do NOT have a subcategory must use the

category value as the subcategory value.

• SSRS must NOT connect to databases more frequently than once every 30 minutes.

• Sales department users must be able to use Microsoft Excel to browse tabular data.

###EndCaseStudy###

After you deploy the RegionalSales report, you attempt to configure the subscriptions.

You discover that the subscription creation screen does not display the option to deliver the

report by email.

You need to ensure that subscriptions can be delivered by using email.

What should you do?

You need to implement a solution that addresses the index monitoring requirements

###BeginCaseStudy###

Case Study: 6

Coho Winery

Overview

You are a database developer for a company named Coho Winery. Coho Winery has an

office in London.

Coho Winery has an application that is used to process purchase orders from customers and

retailers in 10 different countries.

The application uses a web front end to process orders from the Internet. The web front end

adds orders to a database named Sales. The Sales database is managed by a server named

Server1.

An empty copy of the Sales database is created on a server named Server2 in the London

office. The database will store sales data for customers in Europe.

A new version of the application is being developed. In the new version, orders will be placed

either by using the existing web front end or by loading an XML file.

Once a week, you receive two files that contain the purchase orders and the order details of

orders fromoffshore facilities.

You run the usp_ImportOders stored procedure and the usp_ImportOrderDetails stored

procedure to copy the offshore facility orders to the Sales database.

The Sales database contains a table named Orders that has more than 20 million rows.

Database Definitions

Database and Tables

The following scripts are used to create the database and its tables:

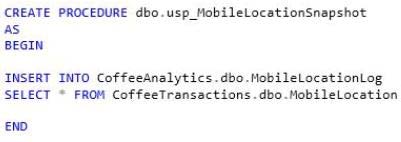

Stored Procedures

The following are the definitions of the stored procedures used in the database:

Indexes

The following indexes are part of the Sales database:

Data Import

The XML files will contain the list of items in each order. Each retailer will have its own

XML schema and will be able to use different types of encoding. Each XML schema will use

a default namespace. The default namespaces are not guaranteed to be unique.

For testing purposes, you receive an XSD file from a customer.

For testing purposes, you also create an XML schema collection named ValidateOrder.

ValidateOrder contains schemas for all of the retailers.

The new version of the application must validate the XML file, parse the data, and store the

parsed data along with the original XML file in the database. The original XML file must be

stored without losing any data.

Reported Issues

Performance Issues

You notice the following for the usp_GetOrdersAndItems stored procedure:

• The stored procedure takes a long time to complete.

• Less than two percent of the rows in the Orders table are retrieved by

usp_GetOrdersAndItems.

• A full table scan runs when the stored procedure executes.

• The amount of disk space used and the amount of time required to insert data are very

high.

You notice that the usp_GetOrdersByProduct stored procedure uses a table scan when the

stored procedure is executed.

Page Split Issues

Updates to the Orders table cause excessive page splits on the IX_Orders_ShipDate index.

Requirements

Site Requirements

Users located in North America must be able to view sales data for customers in North

America and Europe in a single report. The solution must minimize the amount of traffic over

the WAN link between the offices.

Bulk Insert Requirements

The usp_ImportOrderDetails stored procedure takes more than 10 minutes to complete. The

stored procedure runs daily. If the stored procedure fails, you must ensure that the stored

procedure restarts from the last successful set of rows.

Index Monitoring Requirements

The usage of indexes in the Sales database must be monitored continuously. Monitored data

must be maintained if a server restarts. The monitoring solution must minimize the usage of

memory resources and processing resources.

###EndCaseStudy###

You need to implement a solution that addresses the index monitoring requirements.

What should you do?

What should you choose?

###BeginCaseStudy###

Case Study: 4

WingTip Toys

General Background

You are a data architect for WingTip Toys. The company uses SQL Server 2012 Enterprise

edition. SQL Server Analysis Services (SSAS) and SQL Server Reporting Services (SSRS)

are installed on separate servers.

Data Warehouse

The company’s data warehouse initially contained less than 100 MB and 100 million rows of

data from only one data source. It now contains more than 10 TB and 10 billion rows of data,

in 25 tables, from 12 data sources.

The largest table in the data warehouse, the factOrders table, contains 5 TB of data. The

factOrders table contains three date keys: OrderDateKey InvoiceDateKey, and ShipDateKey.

The data warehouse server has 1 TB of RAM. Memory usage is currently at 20 percent.

One billion rows of data are added to the data warehouse each month. New data is copied

each night from the data sources into SQL Server staging tables, and existing records are not

updated. The largest data set is order information, which is loaded in parallel into multiple

staging tables, one for each data source. All the staging tables have the same structure and

belong to the same filegroup as the factOrders table.

The dimCustomers table stores customer information that may change over time.

Data Models

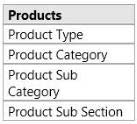

You are developing three SSAS databases, as described in the following table.

Reporting

Business users frequently generate reports in Microsoft Excel by using PowerPivot. The

PowerPivot Management Dashboard does not currently display any usage data.

Several SSRS reports exist that use the data warehouse as a source. The data warehouse

queries are aggregate queries that use the factOrders table and one or more dimension tables.

All SSRS data sources use Integrated Windows authentication.

SSRS displays a security access error message when managers run SSRS reports based on the

Operations database.

Reporting performance has become unacceptably slow.

Business Requirements

Improve the query speed of the SSRS reports.

Allow business users to create reports by using PowerPivot and Power View.

Ensure that all users other than business users can view metadata for the Customers

dimension. Ensure that business users cannot view metadata for the Customers dimension.

Technical Requirements

Modify the tables in the data warehouse to minimize aggregate query processing time.

Minimize disk storage in the data warehouse.

Ensure that all multidimensional models process data as quickly as possible.

Create a fact table named factCustomerContact in the data warehouse to store the contact

date, customer key, and communication type for each instance of customer contact.

Store the history of customer information changes in the dimCustomers table.

Move data from the staging tables into the factOrders table as quickly as possible. When

creating dimensions for the date keys in the factOrders table, minimize storage space

requirements and optimize the cube processing time.

Ensure that queries against the Sales database return the most current data in the data

warehouse.

Ensure that the SSAS model of the Finance database does not page to disk or return a

memory error as the size of the database grows.

Create an SSAS monitoring solution that tracks the following data:

• Queries answered per second

• Queries from cache direct per second

• Queries from file per second.

###EndCaseStudy###

You need to select and configure a tool for the monitoring solution.

What should you choose?

How should you configure the data source for the report?

###BeginCaseStudy###

Case Study: 2

Contoso, Ltd

Background

You are the business intelligence (BI) solutions architect for Contoso Ltd, a multinational

sales company with offices in London, Madrid, Paris, Brisbane, Tokyo, and New York.

Contoso sells office consumable products such as pens, printer ink, and paper.

You produce solutions by using SQL Server 2012 Business Intelligence Edition and

Microsoft SharePoint Server 2010 Enterprise Edition with SP1.

Technical Background

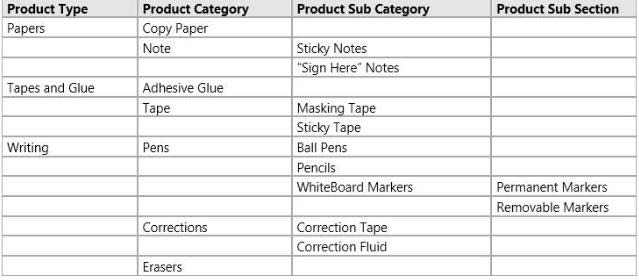

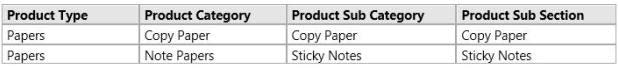

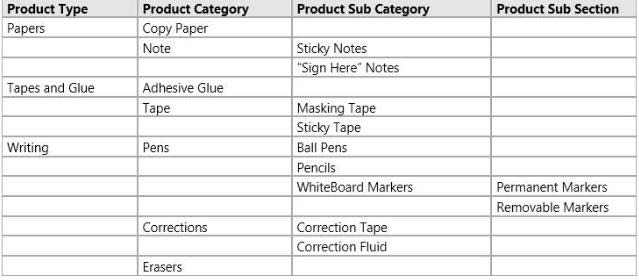

Contoso’s products are categorized by using four levels while some use only two or three

levels. Products are categorized as shown in the following table.

Contoso sells products through mobile sales staff, direct marketing, and its website. Sales

personnel are located in various regions around the world, and each region has a sales

manager who is paid a quarterly bonus based on the total sales in the region during the

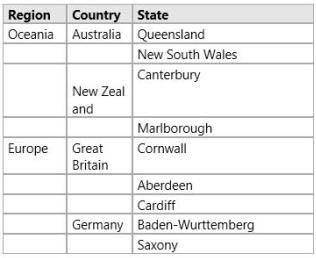

quarter. Regions are categorized as shown in the following table.

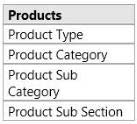

SQL Server Analysis Services (SSAS) is used to host a multidimensional database. The

database contains a single cube named Sales and three database dimensions named Products,

Regions, and Date. A single measure named Sales Total has been defined in the cube. The

data source for the database is a SQL Server data warehouse.

The Products dimension contains a single user-defined hierarchy named Products. To prevent

the display of empty members when users browse the Products dimension, the Extract,

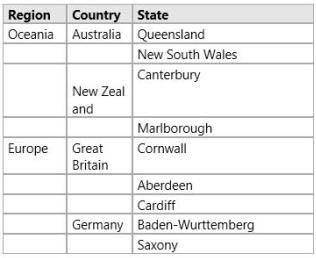

Transform, and Load (ETL) process populates all missing values as shown in the following diagram.

The structure of the Products hierarchy is shown in the following diagram.

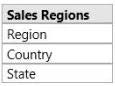

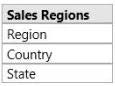

The Regions dimension contains a single user-defined hierarchy named Sales Regions. The

dimension is based on a single dimension table in the data warehouse and the attribute

relationships have not been modified since the dimension was created by using the

Dimension wizard. The structure of the Sales Regions hierarchy is shown in the following diagram.

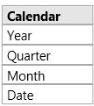

The Date dimension contains a single user-defined hierarchy named Calendar. The structure

of the Calendar hierarchyis shown in the following diagram.

A role named UserRegions has been created in the SSAS database that will be used to filter

members in the Regions dimension based on the authenticated user.

Administrative staff from around the world will produce sales reports with Microsoft Excel

2010 based on the Sales cube.

Developers will produce reports with SQL Server Reporting Services (SSRS) based on the

Sales cube and the reports will be delivered to users through report subscriptions and a web

browser-All users log on to an Active Directory Domain Services (AD DS) domain named

contoso.com.

All client computers and servers are joined to the contoso.com domain.

Business Requirements

The BI system must meet the following reporting requirements:

• Display all sales figures in euro currency, regardless of the client’s reporting location

• Include a new measure named AD Sales that calculates average daily sales for a

selected month

• Support near real-time reporting while maintaining good performance for

multidimensional queries

• Support reports that show currency exchange rates

• Deliver executive reports that are parameterized and rendered from report snapshots

In addition, cube objects must use terms familiar to users from around the world. For

example, in the SalesRegions hierarchy, users from Great Britain must see the State level

presented as County when browsing the Sales cube.

The Sales cube must support a new measure group named Sales Planning. The measure group

must consist of a single measure named Sales Plan that enables the management team to use

Excel 2010 to enter sales plans for future monitoring.

The BI system must meet the following technical requirements:

Architecture requirements

o The system must use separate servers for each of the following components:

SQL Server Database Engine

SQL Server Integration Services

SQL Server Analysis Services in multidimensional mode SharePoint Server with

the Reporting Services Add-in

o All servers must be installed using U.S. regional settings.

o The system must source currency exchange rate data from a database hosted in

Microsoft Azure SQL Database.

Security requirements

o When possible, the system must use Windows authentication for all database

connections.

o The system must prevent users from querying data from outside of their region.

o The system must allow certain users to query data from multiple regions.

Development requirements

o When browsing the Products hierarchy, repeating values for different levels of a given

drill-path must be avoided. For example, Papers -> Copy Paper -> Copy Paper ->

Copy Paper should appear simply as Papers -> Copy Paper.

o The system must support report snapshots. The default maximum number of retained

snapshots must not exceed five.

###EndCaseStudy###

You need to develop an SSRS report that retrieves currency exchange rate data.

How should you configure the data source for the report?

Which statements should you execute?

###BeginCaseStudy###

Case Study: 6

Coho Winery

Overview

You are a database developer for a company named Coho Winery. Coho Winery has an

office in London.

Coho Winery has an application that is used to process purchase orders from customers and

retailers in 10 different countries.

The application uses a web front end to process orders from the Internet. The web front end

adds orders to a database named Sales. The Sales database is managed by a server named

Server1.

An empty copy of the Sales database is created on a server named Server2 in the London

office. The database will store sales data for customers in Europe.

A new version of the application is being developed. In the new version, orders will be placed

either by using the existing web front end or by loading an XML file.

Once a week, you receive two files that contain the purchase orders and the order details of

orders fromoffshore facilities.

You run the usp_ImportOders stored procedure and the usp_ImportOrderDetails stored

procedure to copy the offshore facility orders to the Sales database.

The Sales database contains a table named Orders that has more than 20 million rows.

Database Definitions

Database and Tables

The following scripts are used to create the database and its tables:

Stored Procedures

The following are the definitions of the stored procedures used in the database:

Indexes

The following indexes are part of the Sales database:

Data Import

The XML files will contain the list of items in each order. Each retailer will have its own

XML schema and will be able to use different types of encoding. Each XML schema will use

a default namespace. The default namespaces are not guaranteed to be unique.

For testing purposes, you receive an XSD file from a customer.

For testing purposes, you also create an XML schema collection named ValidateOrder.

ValidateOrder contains schemas for all of the retailers.

The new version of the application must validate the XML file, parse the data, and store the

parsed data along with the original XML file in the database. The original XML file must be

stored without losing any data.

Reported Issues

Performance Issues

You notice the following for the usp_GetOrdersAndItems stored procedure:

• The stored procedure takes a long time to complete.

• Less than two percent of the rows in the Orders table are retrieved by

usp_GetOrdersAndItems.

• A full table scan runs when the stored procedure executes.

• The amount of disk space used and the amount of time required to insert data are very

high.

You notice that the usp_GetOrdersByProduct stored procedure uses a table scan when the

stored procedure is executed.

Page Split Issues

Updates to the Orders table cause excessive page splits on the IX_Orders_ShipDate index.

Requirements

Site Requirements

Users located in North America must be able to view sales data for customers in North

America and Europe in a single report. The solution must minimize the amount of traffic over

the WAN link between the offices.

Bulk Insert Requirements

The usp_ImportOrderDetails stored procedure takes more than 10 minutes to complete. The

stored procedure runs daily. If the stored procedure fails, you must ensure that the stored

procedure restarts from the last successful set of rows.

Index Monitoring Requirements

The usage of indexes in the Sales database must be monitored continuously. Monitored data

must be maintained if a server restarts. The monitoring solution must minimize the usage of

memory resources and processing resources.

###EndCaseStudy###

You need to implement a solution that solves the performance issues of

usp_GetOrdersAndItems.

Which statements should you execute?

Which model type should you select?

###BeginCaseStudy###

Case Study: 4

WingTip Toys

General Background

You are a data architect for WingTip Toys. The company uses SQL Server 2012 Enterprise

edition. SQL Server Analysis Services (SSAS) and SQL Server Reporting Services (SSRS)

are installed on separate servers.

Data Warehouse

The company’s data warehouse initially contained less than 100 MB and 100 million rows of

data from only one data source. It now contains more than 10 TB and 10 billion rows of data,

in 25 tables, from 12 data sources.

The largest table in the data warehouse, the factOrders table, contains 5 TB of data. The

factOrders table contains three date keys: OrderDateKey InvoiceDateKey, and ShipDateKey.

The data warehouse server has 1 TB of RAM. Memory usage is currently at 20 percent.

One billion rows of data are added to the data warehouse each month. New data is copied

each night from the data sources into SQL Server staging tables, and existing records are not

updated. The largest data set is order information, which is loaded in parallel into multiple

staging tables, one for each data source. All the staging tables have the same structure and

belong to the same filegroup as the factOrders table.

The dimCustomers table stores customer information that may change over time.

Data Models

You are developing three SSAS databases, as described in the following table.

Reporting

Business users frequently generate reports in Microsoft Excel by using PowerPivot. The

PowerPivot Management Dashboard does not currently display any usage data.

Several SSRS reports exist that use the data warehouse as a source. The data warehouse

queries are aggregate queries that use the factOrders table and one or more dimension tables.

All SSRS data sources use Integrated Windows authentication.

SSRS displays a security access error message when managers run SSRS reports based on the

Operations database.

Reporting performance has become unacceptably slow.

Business Requirements

Improve the query speed of the SSRS reports.

Allow business users to create reports by using PowerPivot and Power View.

Ensure that all users other than business users can view metadata for the Customers

dimension. Ensure that business users cannot view metadata for the Customers dimension.

Technical Requirements

Modify the tables in the data warehouse to minimize aggregate query processing time.

Minimize disk storage in the data warehouse.

Ensure that all multidimensional models process data as quickly as possible.

Create a fact table named factCustomerContact in the data warehouse to store the contact

date, customer key, and communication type for each instance of customer contact.

Store the history of customer information changes in the dimCustomers table.

Move data from the staging tables into the factOrders table as quickly as possible. When

creating dimensions for the date keys in the factOrders table, minimize storage space

requirements and optimize the cube processing time.

Ensure that queries against the Sales database return the most current data in the data

warehouse.

Ensure that the SSAS model of the Finance database does not page to disk or return a

memory error as the size of the database grows.

Create an SSAS monitoring solution that tracks the following data:

• Queries answered per second

• Queries from cache direct per second

• Queries from file per second.

###EndCaseStudy###

You need to select the appropriate model type for the Finance database.

Which model type should you select?

Which aggregation function should you use?

###BeginCaseStudy###

Case Study: 2

Contoso, Ltd

Background

You are the business intelligence (BI) solutions architect for Contoso Ltd, a multinational

sales company with offices in London, Madrid, Paris, Brisbane, Tokyo, and New York.

Contoso sells office consumable products such as pens, printer ink, and paper.

You produce solutions by using SQL Server 2012 Business Intelligence Edition and

Microsoft SharePoint Server 2010 Enterprise Edition with SP1.

Technical Background

Contoso’s products are categorized by using four levels while some use only two or three

levels. Products are categorized as shown in the following table.

Contoso sells products through mobile sales staff, direct marketing, and its website. Sales

personnel are located in various regions around the world, and each region has a sales

manager who is paid a quarterly bonus based on the total sales in the region during the

quarter. Regions are categorized as shown in the following table.

SQL Server Analysis Services (SSAS) is used to host a multidimensional database. The

database contains a single cube named Sales and three database dimensions named Products,

Regions, and Date. A single measure named Sales Total has been defined in the cube. The

data source for the database is a SQL Server data warehouse.

The Products dimension contains a single user-defined hierarchy named Products. To prevent

the display of empty members when users browse the Products dimension, the Extract,

Transform, and Load (ETL) process populates all missing values as shown in the following diagram.

The structure of the Products hierarchy is shown in the following diagram.

The Regions dimension contains a single user-defined hierarchy named Sales Regions. The

dimension is based on a single dimension table in the data warehouse and the attribute

relationships have not been modified since the dimension was created by using the

Dimension wizard. The structure of the Sales Regions hierarchy is shown in the following diagram.

The Date dimension contains a single user-defined hierarchy named Calendar. The structure

of the Calendar hierarchyis shown in the following diagram.

A role named UserRegions has been created in the SSAS database that will be used to filter

members in the Regions dimension based on the authenticated user.

Administrative staff from around the world will produce sales reports with Microsoft Excel

2010 based on the Sales cube.

Developers will produce reports with SQL Server Reporting Services (SSRS) based on the

Sales cube and the reports will be delivered to users through report subscriptions and a web

browser-All users log on to an Active Directory Domain Services (AD DS) domain named

contoso.com.

All client computers and servers are joined to the contoso.com domain.

Business Requirements

The BI system must meet the following reporting requirements:

• Display all sales figures in euro currency, regardless of the client’s reporting location

• Include a new measure named AD Sales that calculates average daily sales for a

selected month

• Support near real-time reporting while maintaining good performance for

multidimensional queries

• Support reports that show currency exchange rates

• Deliver executive reports that are parameterized and rendered from report snapshots

In addition, cube objects must use terms familiar to users from around the world. For

example, in the SalesRegions hierarchy, users from Great Britain must see the State level

presented as County when browsing the Sales cube.

The Sales cube must support a new measure group named Sales Planning. The measure group

must consist of a single measure named Sales Plan that enables the management team to use

Excel 2010 to enter sales plans for future monitoring.

The BI system must meet the following technical requirements:

Architecture requirements

o The system must use separate servers for each of the following components:

SQL Server Database Engine

SQL Server Integration Services

SQL Server Analysis Services in multidimensional mode SharePoint Server with

the Reporting Services Add-in

o All servers must be installed using U.S. regional settings.

o The system must source currency exchange rate data from a database hosted in

Microsoft Azure SQL Database.

Security requirements

o When possible, the system must use Windows authentication for all database

connections.

o The system must prevent users from querying data from outside of their region.

o The system must allow certain users to query data from multiple regions.

Development requirements

o When browsing the Products hierarchy, repeating values for different levels of a given

drill-path must be avoided. For example, Papers -> Copy Paper -> Copy Paper ->

Copy Paper should appear simply as Papers -> Copy Paper.

o The system must support report snapshots. The default maximum number of retained

snapshots must not exceed five.

###EndCaseStudy###

You need to create the AD Sales measure.

Which aggregation function should you use?

You need to monitor the health of your tables and indexes in order to implement the required index maintenance

###BeginCaseStudy###

Case Study: 7

Fourth Coffee

Background

Corporate Information

Fourth Coffee is global restaurant chain. There are more than 5,000 locations worldwide.

Physical Locations

Currently a server at each location hosts a SQL Server 2012 instance. Each instance contains

a database called StoreTransactions that stores all transactions from point of sale and uploads

summary batches nightly.

Each server belongs to the COFFECORP domain. Local computer accounts access the

StoreTransactions database at each store using sysadmin and datareaderwriter roles.

Planned changes

Fourth Coffee has three major initiatives:

• The FT department must consolidate the point of sales database infrastructure.

• The marketing department plans to launch a mobile application for micropayments.

• The finance department wants to deploy an internal tool that will help detect fraud.

Initially, the mobile application will allow customers to make micropayments to buy coffee

and other items on the company web site. These micropayments may be sent as gifts to other

users and redeemed within an hour of ownership transfer. Later versions will generate

profiles based on customer activity that will push texts and ads generated by an analytics

application.

When the consolidation is finished and the mobile application is in production, the

micropayments and point of sale transactions will use the same database.

Existing Environment

Existing Application Environment

Some stores have been using several pilot versions of the micropayment application. Each

version currently is in a database that is independent from the point of sales systems. Some

versions have been used in field tests at local stores, and others are hosted at corporate

servers. All pilot versions were developed by using SQL Server 2012.

Existing Support Infrastructure

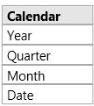

The proposed database for consolidating micropayments and transactions is called

CoffeeTransactions. The database is hosted on a SQL Server 2014 Enterprise Edition

instance and has the following file structures:

Business Requirements

General Application Solution Requirements

The database infrastructure must support a phased global rollout of the micropayment

application and consolidation.

The consolidated micropayment and point of sales database will be into a CoffeeTransactions

database. The infrastructure also will include a new CoffeeAnalytics database for reporting

on content from CoffeeTransactions.

Mobile applications will interact most frequently with the micropayment database for the

following activities:

• Retrieving the current status of a micropayment;

• Modifying the status of the current micropayment; and

• Canceling the micropayment.

The mobile application will need to meet the following requirements:

• Communicate with web services that assign a new user to a micropayment by using a

stored procedure named usp_AssignUser.

• Update the location of the user by using a stored procedure named

usp_AddMobileLocation.

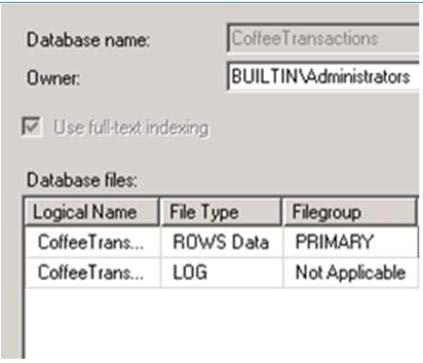

The fraud detection service will need to meet the following requirements:

• Query the current open micropayments for users who own multiple micropayments by

using a stored procedure named usp.LookupConcurrentUsers.

• Persist the current user locations by using a stored procedure named

usp_Mobilel_ocationSnapshot.

• Look at the status of micropayments and mark micropayments for internal

investigations.

• Move micropayments to dbo.POSException table by using a stored procedure named

ups_DetectSuspiciousActivity.

• Detect micropayments that are flagged with a StatusId value that is greater than 3 and

that occurred within the last minute.

The CoffeeAnalytics database will combine imports of the POSTransaction and

MobileLocation tables to create a UserActivity table for reports on the trends in activity.

Queries against the UserActivity table will include aggregated calculations on all columns

that are not used in filters or groupings.

Micropayments need to be updated and queried for only a week after their creation by the

mobile application or fraud detection services.

Performance

The most critical performance requirement is keeping the response time for any queries of the

POSTransaction table predictable and fast.

Web service queries will take a higher priority in performance tuning decisions over the fraud

detection agent queries.

Scalability

Queries of the user of a micropayment cannot return while the micropayment is being

updated, but can show different users during different stages of the transaction.

The fraud detection service frequently will run queries over the micropayments that occur

over different time periods that range between 30 seconds and ten minutes.

The POSTransaction table must have its structure optimized for hundreds of thousands of

active micropayments that are updated frequently.

All changes to the POSTransaction table will require testing in order to confirm the expected

throughput that will support the first year’s performance requirements.

Updates of a user’s location can tolerate some data loss.

Initial testing has determined that the POSTransaction and POSException tables will be

migrated to an in-memory optimized table.

Availability

In order to minimize disruption at local stores during consolidation, nightly processes will

restore the databases to a staging server at corporate headquarters.

Technical Requirements

Security

The sensitive nature of financial transactions in the store databases requires certification of

the COFFECORP\Auditors group at corporate that will perform audits of the data. Members

of the COFFECORP\Auditors group cannot have sysadmin or datawriter access to the

database.

Compliance requires that the data stewards have access to any restored StoreTransactions

database without changing any security settings at a database level.

Nightly batch processes are run by the services account in the COFFECORP\StoreAgent

group and need to be able to restore and verify the schema of the store databases match.

No Windows group should have more access to store databases than is necessary.

Maintainability

You need to anticipate when POSTransaction table will need index maintenance.

When the daily maintenance finishes, micropayments that are one week old must be available

for queries in UserActivity table but will be queried most frequently within their first week

and will require support for in-memory queries for data within first week.

The maintenance of the UserActivity table must allow frequent maintenance on the day’s

most recent activities with minimal impact on the use of disk space and the resources

available to queries. The processes that add data to the UserActivity table must be able to

update data from any time period, even while maintenance is running.

The index maintenance strategy for the UserActivity table must provide the optimal structure

for both maintainability and query performance.

All micropayments queries must include the most permissive isolation level available for the

maximum throughput.

In the event of unexpected results, all stored procedures must provide error messages in text

message to the calling web service.

Any modifications to stored procedures will require the minimal amount of schema changes

necessary to increase the performance.

Performance

Stress testing of the mobile application on the proposed CoffeeTransactions database

uncovered performance bottlenecks. The sys.dm_os_wait_stats Dynamic Management View

(DMV) shows high wait_time values for WRTTELOG and PAGEIOLATCHJJP wait types

when updating the MobileLocation table.

Updates to the MobileLocation table must have minimal impact on physical resources.

Supporting Infrastructure

The stored procedure usp_LookupConcurrentUsers has the current implementation:

The current stored procedure for persisting a user location is defined in the following code:

The current stored procedure for managing micropayments needing investigation is defined

in the following code:

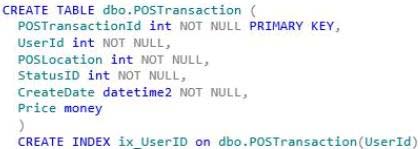

The current table, before implementing any performance enhancements, is defined as follows:

###EndCaseStudy###

You need to monitor the health of your tables and indexes in order to implement the required

index maintenance strategy.

What should you do?