You need to implement the aggregation designs for the cube

###BeginCaseStudy###

Case Study: 3

Data Architect

General Background

You are a Data Architect for a company that uses SQL Server 2012 Enterprise edition.

You have been tasked with designing a data warehouse that uses the company’s financial database as the data source. From the data warehouse, you will develop a cube to simplify the creation of accurate financial reports and related data analysis.

Background

You will utilize the following three servers:

• ServerA runs SQL Server Database Engine. ServerA is a production server and also hosts the financial database.

• ServerB runs SQL Server Database Engine, SQL Server Analysis Services (SSAS) in multidimensional mode, SQL Server Integration Services (SSIS), and SQL Server Reporting Services (SSRS).

• ServerC runs SSAS in multidimensional mode.

• The financial database is used by a third-party application and the table structures cannot be modified.

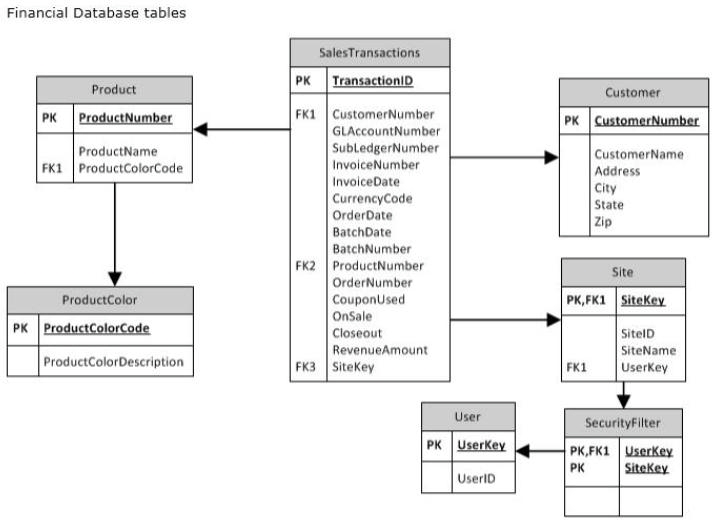

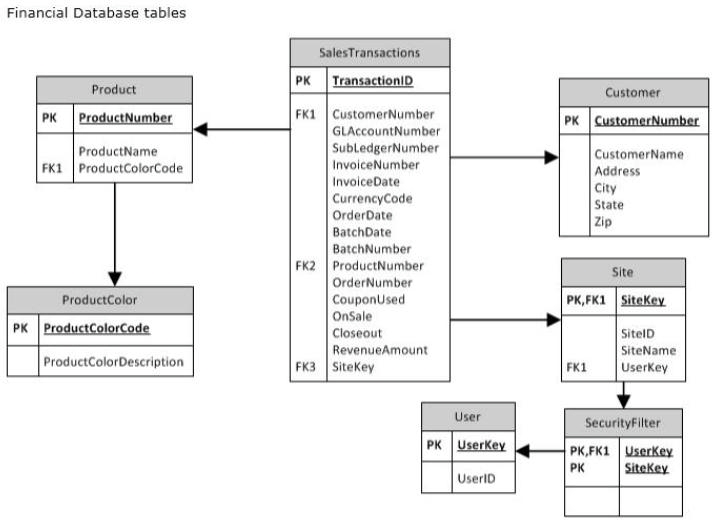

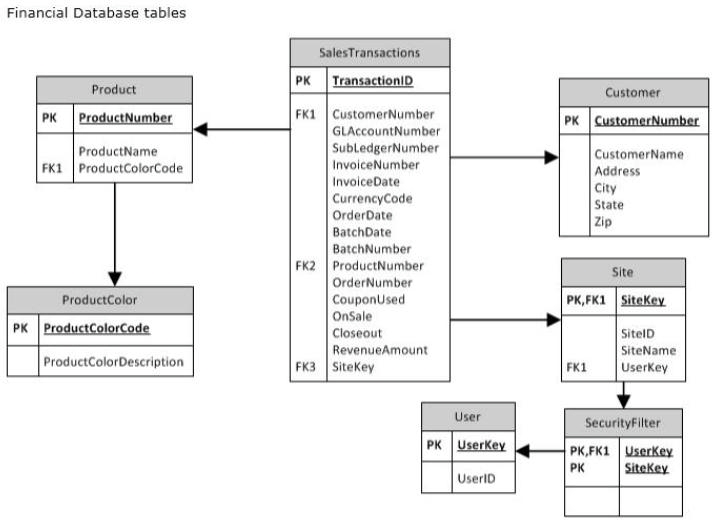

The relevant tables in the financial database are shown in the exhibit.

The SalesTransactions table is 500 GB and is anticipated to grow to 2 TB. The table is partitioned by month. It contains only the last five years of financial data. The CouponUsed, OnSale, and Closeout columns contain only the values Yes or No. Each of the other tables is less than 10 MB and has only one partition.
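CouponUsed, OnSale, and Closeout each contain only Yes or No. Together, the three columns can therefore take at most 2 × 2 × 2 = 8 distinct combinations, however large SalesTransactions grows. The Python sketch below illustrates that arithmetic only. It enumerates the combinations and maps one row's three flags to a single small key. The integer keys and the dictionary row format are assumptions for illustration and are not part of the case study.

```python
from itertools import product

# The three Yes/No columns named in the case study.
FLAG_COLUMNS = ("CouponUsed", "OnSale", "Closeout")


def build_flag_combinations():
    """Return {(CouponUsed, OnSale, Closeout): key} for every Yes/No combination.

    The keys 1..8 are hypothetical surrogate keys used only for illustration.
    """
    combos = product(("No", "Yes"), repeat=len(FLAG_COLUMNS))
    return {combo: key for key, combo in enumerate(combos, start=1)}


def flag_key(row, lookup):
    """Map one row (a dict of column name -> "Yes"/"No") to its combination key."""
    return lookup[tuple(row[col] for col in FLAG_COLUMNS)]


if __name__ == "__main__":
    lookup = build_flag_combinations()
    print(len(lookup))  # 8
    print(flag_key({"CouponUsed": "Yes", "OnSale": "No", "Closeout": "Yes"}, lookup))
```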

The SecurityFilter table specifies the sites to which each user has access.

Business Requirements

The extract, transform, load (ETL) process that updates the data warehouse must run daily between 8:00 P.M. and 5:00 A.M. so that it doesn’t impact the performance of ServerA during business hours. The cube data must be available by 8:00 A.M.
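The ETL window wraps past midnight, so a single `start <= t <= end` range check does not express it. The window is the union of two ranges. The Python sketch below is illustrative and rests on stated assumptions. It treats the ETL run and the moment the cube becomes available as separate timestamps. It also assumes the cube only needs to be ready by 8:00 A.M. on the morning the window closes. The function names are hypothetical.

```python
from datetime import datetime, time, timedelta

WINDOW_START = time(20, 0)   # 8:00 P.M.
WINDOW_END = time(5, 0)      # 5:00 A.M. the next morning
CUBE_DEADLINE = time(8, 0)   # 8:00 A.M.


def in_etl_window(t: time) -> bool:
    """True if t is in the 8:00 P.M.-5:00 A.M. window (which crosses midnight)."""
    return t >= WINDOW_START or t < WINDOW_END


def window_close_for(start: datetime) -> datetime:
    """Return the 5:00 A.M. that closes the window containing `start`."""
    if start.time() >= WINDOW_START:
        close_day = start.date() + timedelta(days=1)  # started in the evening
    else:
        close_day = start.date()                      # started after midnight
    return datetime.combine(close_day, WINDOW_END)


def run_is_compliant(etl_start: datetime, etl_end: datetime, cube_ready: datetime) -> bool:
    """Check one nightly run: ETL inside the window, cube ready by 8:00 A.M."""
    if not in_etl_window(etl_start.time()):
        return False
    close = window_close_for(etl_start)
    deadline = datetime.combine(close.date(), CUBE_DEADLINE)
    return etl_end <= close and cube_ready <= deadline
```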

The cube must meet the following business requirements:

• Ensure that reports display the most current information available.

• Allow fast access to support ad-hoc reports and data analysis.

Business analysts will access the data warehouse tables directly, and will access the cube by using SSRS, Microsoft Excel, and Microsoft SharePoint Server 2010 PerformancePoint Services. These tools will access only the cube, not the data warehouse.

Technical Requirements

SSIS solutions must be deployed by using the project deployment model.

You must develop the data warehouse and store the cube on ServerB. When the number of concurrent SSAS users on ServerB reaches a specific number, you must scale out SSAS to ServerC and meet the following requirements:

• Maintain copies of the cube on ServerB and ServerC.

• Ensure that the cube is always available on both servers.

• Minimize query response time.

The cube must meet the following technical requirements:

• The cube must be processed by using an SSIS package.

• The cube must contain the prior day’s data up to 8:00 P.M. but does not need to contain same-day data.

• The cube must include aggregation designs when it is initially deployed.

• A product dimension must be added to the cube. It will contain a hierarchy composed of product name and product color.

Because of the large size of the SalesTransactions table, the cube must store only aggregations; the data warehouse must store the detailed data. Both the data warehouse and the cube must minimize disk space usage.
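The split between detail and aggregations can be pictured as a roll-up. Many detail rows collapse into one summary row per grouping. In that picture, the summary lives in the cube and the rows stay in the warehouse. The Python sketch below groups hypothetical detail rows by month and site. The row keys `sale_date`, `site_id`, and `amount` are assumptions, because the exhibit's columns are not reproduced here. The sketch does not model how SSAS physically stores aggregations or chooses an aggregation design.

```python
from collections import defaultdict
from datetime import date


def aggregate_by_month_and_site(detail_rows):
    """Roll detail rows up to one summary row per (year, month, site).

    Each input row is a dict with the hypothetical keys 'sale_date' (a date),
    'site_id', and 'amount'. Returns {(year, month, site_id): {'amount', 'row_count'}}.
    """
    totals = defaultdict(lambda: {"amount": 0, "row_count": 0})
    for row in detail_rows:
        key = (row["sale_date"].year, row["sale_date"].month, row["site_id"])
        totals[key]["amount"] += row["amount"]
        totals[key]["row_count"] += 1
    return dict(totals)


if __name__ == "__main__":
    rows = [
        {"sale_date": date(2024, 1, 5), "site_id": 1, "amount": 100},
        {"sale_date": date(2024, 1, 20), "site_id": 1, "amount": 50},
        {"sale_date": date(2024, 2, 1), "site_id": 2, "amount": 70},
    ]
    print(aggregate_by_month_and_site(rows))  # three detail rows -> two summary rows
```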

As the cube size increases, you must plan to scale out to additional servers to minimize processing time.

The data warehouse must use a star schema design. The table design must be as denormalized as possible. The history of changes to the Customer table must be tracked in the data warehouse. The cube must use the data warehouse as its only data source.
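The case study only states that Customer history must be tracked. One common way to do this in a star schema is a Type 2 slowly changing dimension. When a tracked attribute changes, the current row is closed with an end date and a new current row is added. The Python sketch below shows that logic under stated assumptions. The tracked attributes (`name`, `city`) and the `valid_from`/`valid_to` fields are hypothetical, and the sketch is not a prescribed design for this case.

```python
import dataclasses
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class CustomerVersion:
    customer_id: int
    name: str          # hypothetical tracked attribute
    city: str          # hypothetical tracked attribute
    valid_from: date
    valid_to: Optional[date] = None  # None marks the current version


def apply_scd2(history: List[CustomerVersion], customer_id: int, name: str,
               city: str, change_date: date) -> List[CustomerVersion]:
    """Return a new history list after applying one incoming customer record."""
    current = next(
        (v for v in history if v.customer_id == customer_id and v.valid_to is None),
        None,
    )
    # Unchanged attributes: keep the history exactly as it is.
    if current is not None and (current.name, current.city) == (name, city):
        return list(history)
    # Close the current version (if any) and append a new current version.
    updated = [dataclasses.replace(v, valid_to=change_date) if v is current else v
               for v in history]
    updated.append(CustomerVersion(customer_id, name, city, valid_from=change_date))
    return updated
```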

Security settings on the data warehouse and the cube must ensure that queries against the SalesTransactions table return only records from the sites to which the current user has access.
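The effect of this requirement is a per-user filter. A transaction is returned only if its site appears in the SecurityFilter entries for the current user. The Python sketch below illustrates that filtering on in-memory data. The `(user_name, site_id)` pair format and the `site_id` key are assumptions, because the exhibit's column names are not reproduced here. The sketch does not show how the filter would be configured in the data warehouse or in SSAS roles.

```python
def visible_transactions(transactions, security_filter, current_user):
    """Return only the transactions whose site the current user may access.

    security_filter: iterable of (user_name, site_id) pairs, standing in for the
    SecurityFilter table (hypothetical column layout).
    transactions: iterable of dicts with a hypothetical 'site_id' key.
    """
    allowed_sites = {site for user, site in security_filter if user == current_user}
    return [t for t in transactions if t["site_id"] in allowed_sites]


if __name__ == "__main__":
    sales = [{"id": 1, "site_id": 10}, {"id": 2, "site_id": 20}, {"id": 3, "site_id": 30}]
    acl = [("alice", 10), ("alice", 30), ("bob", 20)]
    print(visible_transactions(sales, acl, "alice"))  # rows for sites 10 and 30
```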

The ETL process must consist of multiple SSIS packages developed in a single project by using the least amount of effort. The SSIS packages must use a database connection string that is set at execution time to connect to the financial database. All data in the data warehouse must be loaded by the SSIS packages.

You must create a Package Activity report that meets the following requirements:

• Track SSIS package execution data (including package name, status, start time, end time, duration, and rows processed).

• Use the least amount of development effort.
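Of the fields listed for the Package Activity report, duration is the only derived value: end time minus start time. The Python sketch below models one execution as a plain record and produces report rows, newest first. The record type and package names are hypothetical. The sketch does not model where the execution data would come from in SSIS.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PackageExecution:
    package_name: str
    status: str
    start_time: datetime
    end_time: datetime
    rows_processed: int

    @property
    def duration(self) -> timedelta:
        # Duration is derived rather than stored.
        return self.end_time - self.start_time


def activity_report(executions):
    """Return (name, status, start, end, duration_seconds, rows) tuples, newest first."""
    ordered = sorted(executions, key=lambda e: e.start_time, reverse=True)
    return [
        (e.package_name, e.status, e.start_time, e.end_time,
         int(e.duration.total_seconds()), e.rows_processed)
        for e in ordered
    ]
```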

###EndCaseStudy###

You need to implement the aggregation designs for the cube.

What should you do?

You need to ensure that developers can create Multidimensional Expressions (MDX) calculations that use the stored procedure

You develop a SQL Server Analysis Services (SSAS) stored procedure.

You need to ensure that developers can create Multidimensional Expressions (MDX) calculations that use the stored procedure.

What should you do?

You need to implement a solution that meets the job application requirements

###BeginCaseStudy###

Case Study: 5

Litware, Inc

Overview

General Overview

You are a database developer for a company named Litware, Inc. Litware has a main office in Miami.

Litware has a job posting web application named WebApp1. WebApp1 uses a database named DB1. DB1 is hosted on a server named Server1. The database design of DB1 is shown in the exhibit.

WebApp1 allows a user to log on as a job poster or a job seeker. Candidates can search for job openings based on keywords, apply to an opening, view their application, and load their resume in Microsoft Word format. Companies can add a job opening, view the list of candidates who applied to an opening, and mark an application as denied.

Users and Roles

DB1 has five database users named Company, CompanyWeb, Candidate, CandidateWeb, and Administrator.

DB1 has three user-defined database roles. The roles are configured as shown in the following table.

Keyword Search

The keyword searches for the job openings are performed by using the following stored procedure named usp_GetOpenings:

Opening Update

Updates to the Openings table are performed by using the following stored procedure named usp_UpdateOpening:

Problems and Reported Issues

Concurrency Problems

You discover that deadlocks frequently occur.

You identify that a stored procedure named usp_AcceptCandidate and a stored procedure named usp_UpdateCandidate generate deadlocks. The following is the code for usp_AcceptCandidate:

Salary Query Issues

Users report that when they perform a search for job openings without specifying a minimum salary, only job openings that specify a minimum salary are displayed.
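The code of usp_GetOpenings is not reproduced here, so the cause of this symptom cannot be confirmed from the text. One plausible mechanism is SQL's three-valued logic. Comparing a NULL MinSalary with any value yields UNKNOWN, and a WHERE clause keeps only rows whose predicate is TRUE. The Python sketch below simulates that behavior with `None` standing in for NULL. It contrasts a hypothetical filter that still compares the column when no minimum is requested with one that skips the comparison. All names and data are illustrative.

```python
from typing import Optional

OPENINGS = [{"id": 1, "min_salary": 50000}, {"id": 2, "min_salary": None}]


def sql_ge(a: Optional[int], b: Optional[int]) -> Optional[bool]:
    """Model SQL's a >= b: any NULL (None) operand yields UNKNOWN (None)."""
    if a is None or b is None:
        return None
    return a >= b


def where_keeps(result: Optional[bool]) -> bool:
    """A WHERE clause keeps a row only when the predicate is TRUE, not UNKNOWN."""
    return result is True


def search_naive(rows, requested_min):
    # Hypothetical filter: substitutes 0 when no minimum is requested,
    # but still compares the column, so NULL salaries evaluate to UNKNOWN.
    bound = requested_min if requested_min is not None else 0
    return [r["id"] for r in rows if where_keeps(sql_ge(r["min_salary"], bound))]


def search_null_aware(rows, requested_min):
    # Skip the comparison entirely when no minimum salary was requested.
    return [r["id"] for r in rows
            if requested_min is None or where_keeps(sql_ge(r["min_salary"], requested_min))]
```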

Log File Growth Issues

The current log file for DB1 grows constantly. The log file fails to shrink even when the daily SQL Server Agent Shrink Database task runs.

Performance Issues

You discover that a stored procedure named usp_ExportOpenings takes a long time to run and performs a table scan when it runs.

You also discover that the usp_GetOpenings stored procedure takes a long time to run and that the non-clustered index on the Description column is not being used.

Page Split Issues

On DB1, the number of page splits per second spikes every few minutes.

Requirements

Security and Application Requirements

Litware identifies the following security and application requirements:

• Only the Administrator, Company, and CompanyWeb database users must be able to execute the usp_UpdateOpening stored procedure.

• Changes made to the database must not affect WebApp1.

Locking Requirements

Litware identifies the following locking requirements:

• The usp_GetOpenings stored procedure must not be blocked by the usp_UpdateOpening stored procedure.

• If a row is locked in the Openings table, usp_GetOpenings must retrieve the latest version of the row, even if the row has not been committed yet.

Integration Requirements

Litware exports its job openings to an external company as XML data. The XML data uses the following format:

A stored procedure named usp_ExportOpenings will be used to generate the XML data. The following is the code for usp_ExportOpenings:

The stored procedure will be executed by a SQL Server Integration Services (SSIS) package named Package1.

The XML data will be written to a secured folder named Folder1. Only a dedicated Active Directory account named Account1 is assigned the permissions to read from or write to Folder1.

Refactoring Requirements

Litware identifies the following refactoring requirements:

• New code must be written by reusing the following query:

• The results from the query must be able to be joined to other queries.

Upload Requirements

Litware requires users to upload their job experience in a Word file by using WebApp1.

WebApp1 will send the Word file to DB1 as a stream of bytes. DB1 will then convert the Word file to text before the contents of the Word file are saved to the Candidates table.

A database developer creates an assembly named Conversions that contains the following:

• A class named Convert in the SqlConversions namespace

• A method named ConvertToText in the Convert class that converts Word files to text
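ConvertToText takes bytes in and returns text. The Python sketch below is an illustration of that conversion step only. It is not the Conversions assembly, which in this scenario is a .NET assembly called from DB1. It assumes the upload is a .docx file (Office Open XML) rather than a legacy binary .doc. A .docx file is a ZIP package whose body text sits in `<w:t>` elements inside `<w:p>` paragraphs in `word/document.xml`. The function names are hypothetical. `make_minimal_docx` builds a stripped-down test package, not a complete Word document. Edge cases such as text boxes and fields are ignored.

```python
import io
import zipfile
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

W_URI = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"
W_NS = "{" + W_URI + "}"


def convert_to_text(data: bytes) -> str:
    """Extract plain text (one line per paragraph) from the bytes of a .docx file."""
    with zipfile.ZipFile(io.BytesIO(data)) as package:
        root = ET.fromstring(package.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(W_NS + "p"):
        # A paragraph's text may be split across several runs, each with its own <w:t>.
        paragraphs.append("".join(t.text or "" for t in para.iter(W_NS + "t")))
    return "\n".join(paragraphs)


def make_minimal_docx(paragraphs) -> bytes:
    """Build a bare-bones ZIP containing only word/document.xml, for testing."""
    body = "".join(f"<w:p><w:r><w:t>{escape(p)}</w:t></w:r></w:p>" for p in paragraphs)
    xml = f'<w:document xmlns:w="{W_URI}"><w:body>{body}</w:body></w:document>'
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as package:
        package.writestr("word/document.xml", xml)
    return buf.getvalue()
```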

The ConvertToText method accepts a stream of bytes and returns text. The method is used in the following stored procedure:

Job Application Requirements

A candidate can apply to each job opening only once.
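Stated as a data rule, this requirement means each (candidate, opening) pair may appear at most once. A second application for the same pair is rejected, while the same candidate may still apply to other openings. The Python sketch below models that rule in memory. The class and identifiers are hypothetical, since the DB1 exhibit's column names are not reproduced here.

```python
class ApplicationRegistry:
    """In-memory model of uniqueness over the (candidate, opening) pair."""

    def __init__(self):
        self._pairs = set()

    def apply(self, candidate_id: int, opening_id: int) -> bool:
        """Record an application; return False if this pair already applied."""
        key = (candidate_id, opening_id)
        if key in self._pairs:
            return False  # duplicate application rejected
        self._pairs.add(key)
        return True
```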

Data Recovery Requirements

All changes to the database are performed by using stored procedures. WebApp1 generates a unique transaction ID for every stored procedure call that the application makes to the database.

If a server fails, you must be able to restore data to a specified transaction.
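Conceptually, restoring "to a specified transaction" means starting from a backup and replaying the logged changes in commit order, up to and including the target transaction. The Python sketch below models that idea with an in-memory key-value state and a hypothetical `(txn_id, key, value)` log format. It does not represent SQL Server's backup or transaction log mechanics.

```python
def restore_to_transaction(base_state, log, target_txn_id):
    """Replay log entries onto a copy of base_state through the last entry of target_txn_id.

    log: list of (txn_id, key, value) tuples in commit order (hypothetical format).
    Raises ValueError if the target transaction does not appear in the log.
    """
    last = max((i for i, (txn, _, _) in enumerate(log) if txn == target_txn_id),
               default=None)
    if last is None:
        raise ValueError(f"transaction {target_txn_id!r} not found in log")
    state = dict(base_state)  # never modify the backup itself
    for _, key, value in log[: last + 1]:
        state[key] = value
    return state
```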

###EndCaseStudy###

You need to implement a solution that meets the job application requirements.

What should you do?

You need to slice data by the CouponUsed, OnSale, and Closeout columns

This question uses Case Study: 3 (Data Architect), reproduced in full above.

You need to slice data by the CouponUsed, OnSale, and Closeout columns.

What should you do?

Which Business Intelligence Wizard enhancements should you use?

HOTSPOT

A sales cube contains two years of data.

The sales team must see year-over-year (YOY) and month-over-month (MOM) sales metrics.

You need to modify the cube to support the sales team’s requirements.

Which Business Intelligence Wizard enhancements should you use? (To answer, configure the appropriate option or options in the dialog box in the answer area.)
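Both metrics compare a month's total with a prior period. Month-over-month uses the immediately preceding month, wrapping from January to the previous December. Year-over-year uses the same month one year earlier. With only two years of data, months in the first year have no YOY value. The Python sketch below shows this arithmetic on a hypothetical dictionary of monthly totals. It does not show how the Business Intelligence Wizard adds time calculations to the cube.

```python
def growth(current, previous):
    """Percentage change from previous to current; None when no usable prior value exists."""
    if previous in (None, 0):
        return None
    return (current - previous) * 100 / previous


def mom_and_yoy(monthly_sales):
    """monthly_sales maps (year, month) -> total; returns {(year, month): (mom_pct, yoy_pct)}."""
    result = {}
    for (year, month), total in monthly_sales.items():
        prev_month = (year, month - 1) if month > 1 else (year - 1, 12)
        same_month_last_year = (year - 1, month)
        result[(year, month)] = (
            growth(total, monthly_sales.get(prev_month)),
            growth(total, monthly_sales.get(same_month_last_year)),
        )
    return result
```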

Which code segments should you insert at line 03 and line 05?

DRAG DROP

You have a cube named Cube1 that contains the sales data for your company.

You plan to build a report based on the cube.

You need to write an MDX expression that returns the total sales from the first month of the 2009 fiscal year and the total sales from the same period of the 2008 fiscal year.

Which code segments should you insert at line 03 and line 05? To answer, drag the appropriate code segments to the correct lines. Each code segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

Which statement should you execute on DB1?

###BeginCaseStudy###

Case Study: 5

Litware, Inc

Overview

General Overview

You are a database developer for a company named Litware, Inc. Litware has a main office in

Miami.

Litware has a job posting web application named WebApp1. WebApp1 uses a database

named DB1. DB1 is hosted on a server named Server1. The database design of DB1 is shown

in the exhibit. (Click the Exhibit button.)

WebApp1 allows a user to log on as a job poster or a job seeker. Candidates can search for

job openings based on keywords, apply to an opening, view their application, and load their

resume in Microsoft Word format. Companies can add a job opening, view the list of

candidates who applied to an opening, and mark an application as denied.

Users and Roles

DB1 has five database users named Company, CompanyWeb, Candidate, CandidateWeb, and

Administrator.

DB1 has three user-defined database roles. The roles are configured as shown in the

following table.

Keyword Search

The keyword searches for the job openings are performed by using the following stored

procedure named usp_GetOpenings:

Opening Update

Updates to the Openings table are performed by using the following stored procedure named

usp_UpdateOpening:

Problems and Reported Issues

Concurrency Problems

You discover that deadlocks frequently occur.

You identify that a stored procedure named usp_AcceptCandidate and a stored procedure

named usp_UpdateCandidate generate deadlocks. The following is the code for

usp_AcceptCandidate:

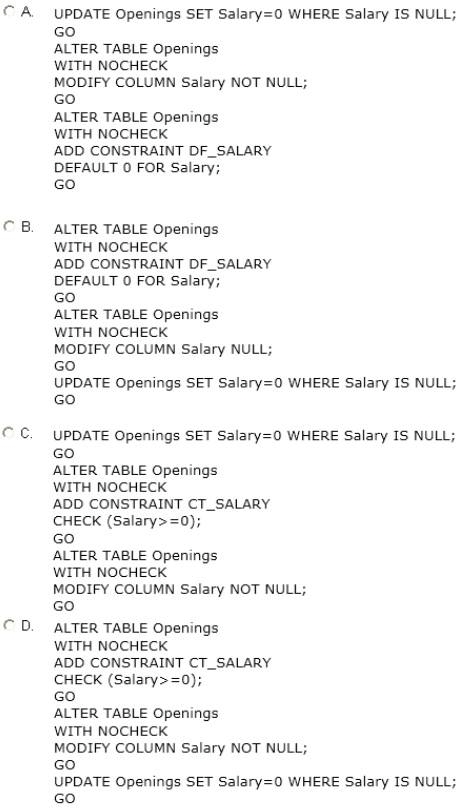

Salary Query Issues

Users report that when they perform a search for job openings without specifying a minimum

salary, only job openings that specify a minimum salary are displayed.

Log File Growth Issues

The current log file for DB1 grows constantly. The log file fails to shrink even when the daily

SQL Server Agent Shrink Database task runs.

Performance Issues

You discover that a stored procedure named usp_ExportOpenings takes a long time to run

and executes a table scan when it runs.

You also discover that the usp_GetOpenings stored procedure takes a long time to run and

that the non-clustered index on the Description column is not being used.

Page Split Issues

On DB1, many page splits per second spike every few minutes.

Requirements

Security and Application Requirements

Litware identifies the following security and application requirements:

• Only the Administrator, Company, and CompanyWeb database users must be able to

execute the usp_UpdateOpening stored procedure.

• Changes made to the database must not affect WebApp1.

Locking Requirements

Litware identifies the following locking requirements:

• The usp_GetOpenings stored procedure must not be blocked by the

usp_UpdateOpening stored procedure.

• If a row is locked in the Openings table, usp_GetOpenings must retrieve the latest

version of the row, even if the row was not committed yet.

Integration Requirements

Litware exports its job openings to an external company as XML data. The XML data uses

the following format:

A stored procedure named usp_ExportOpenings will be used to generate the XML data. The

following is the code for usp_ExportOpenings:

The stored procedure will be executed by a SQL Server Integration Services (SSIS) package

named Package1.

The XML data will be written to a secured folder named Folder1. Only a dedicated Active

Directory account named Account1 is assigned the permissions to read from or write to

Folder1.

Refactoring Requirements

Litware identifies the following refactoring requirements:

• New code must be written by reusing the following query:

• The results from the query must be able to be joined to other queries.

Upload Requirements

Litware requires users to upload their job experience in a Word file by using WebApp1.

WebApp1 will send the Word file to DB1 as a stream of bytes. DB1 will then convert the

Word file to text before the contents of the Word file is saved to the Candidates table.

A database developer creates an assembly named Conversions that contains the following:

• A class named Convert in the SqlConversions namespace

• A method named ConvertToText in the Convert class that converts Word files to text

The ConvertToText method accepts a stream of bytes and returns text. The method is used in

the following stored procedure:

Job Application Requirements

A candidate can only apply to each job opening once.

Data Recovery Requirements

All changes to the database are performed by using stored procedures. WebApp1 generates a

unique transaction ID for every stored procedure call that the application makes to the

database.

If a server fails, you must be able to restore data to a specified transaction.

###EndCaseStudy###

You need to implement a solution that resolves the salary query issue.

Which statement should you execute on DB1?

You need to design a cube partitioning strategy to be implemented as the cube size increases

This question uses Case Study: 3 (Data Architect), reproduced in full above.

You need to design a cube partitioning strategy to be implemented as the cube size increases.

What should you do?

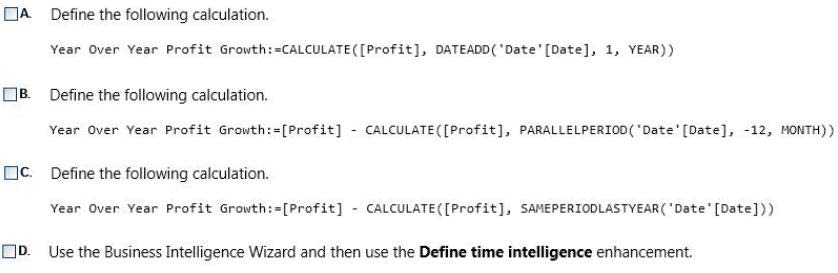

You need to create a measure to report on year-over-year growth of profit

You are developing a SQL Server Analysis Services (SSAS) tabular project. A model defines a measure named Profit and includes a table named Date. The table includes year, semester, quarter, month, and date columns. The Date column is of data type Date. The table contains a set of contiguous dates.

You need to create a measure to report on year-over-year growth of profit.

What should you do? (Each answer presents a complete solution. Choose all that apply.)

Which code segment should you use to implement the Conversions assembly?

This question uses Case Study: 5 (Litware, Inc.), reproduced in full above.

You need to implement a solution that addresses the upload requirements.

Which code segment should you use to implement the Conversions assembly?